Automatic Multiview Synthesis – Towards a Mobile System on a Chip

In this paper, we present a complete hardware system for fully automatic multiview synthesis (MVS).

December 13, 2015

International Conference on Visual Communications and Image Processing (VCIP) 2015

Authors

Michael Schaffner (Disney Research/ETH Joint PhD)

Frank Gürkaynak (ETH Zurich)

Hubert Kaeslin (ETH Zurich)

Luca Benini (ETH Zurich/Università di Bologna)

Aljoscha Smolic (Disney Research)

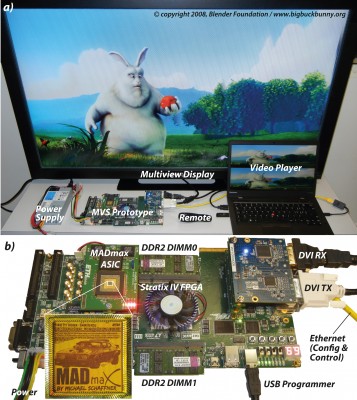

Over the last few years, multiview autostereoscopic displays (MADs) that enable a glasses-free, albeit limited, 3D experience have become commercially available. The main problem with MADs is that they require several views (typically 8 or 9), whereas most 3D video content today is in stereoscopic 3D (S3D), i.e., it consists of only two views. To bridge this gap, the research community has begun to devise automatic multiview synthesis (MVS) methods. To reach their full potential, these algorithms must run in real time and be portable to end-user devices. In this paper, we present a complete hardware system for fully automatic MVS. We give an overview of the algorithmic flow and the corresponding hardware architecture, and provide implementation results of a hybrid FPGA/ASIC prototype, which is the first complete hardware system implementing MVS based on image-domain warping (IDW). The proposed hardware IP could be used as a co-processor in a system-on-chip (SoC) targeting 3D TV sets, thereby enabling efficient real-time content generation.
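To make the gap between two input views and 8 or 9 display views more concrete, the following is a minimal sketch of how output view positions and per-view warps might be derived from a stereo pair. It rests on assumptions of this sketch, not on details taken from the paper: the left and right input views sit at normalized positions 0.0 and 1.0, output views are spaced uniformly around their midpoint, a warp is stored as vertex positions on a regular grid, and a view at position `alpha` is obtained by linearly blending the identity grid with a warp that maps the left image onto the right view. The names `view_positions`, `interpolate_warp`, and the `spacing` parameter are hypothetical.

```python
from typing import List, Tuple

# A warp vertex as (x, y) in pixel coordinates (assumed representation).
Vertex = Tuple[float, float]


def view_positions(num_views: int, spacing: float) -> List[float]:
    """Normalized positions of num_views output views, symmetric around the
    midpoint of the input stereo pair (left view = 0.0, right view = 1.0).

    `spacing` is a hypothetical parameter: the distance between adjacent
    output views, in units of the input baseline. Positions outside [0, 1]
    correspond to extrapolated views beyond the input pair.
    """
    mid = 0.5
    return [mid + (i - (num_views - 1) / 2) * spacing for i in range(num_views)]


def interpolate_warp(grid: List[Vertex], warp: List[Vertex], alpha: float) -> List[Vertex]:
    """Linearly blend the regular (identity) grid with a warp that maps the
    left image onto the right view.

    alpha = 0 reproduces the left view's grid, alpha = 1 the full warp;
    values outside [0, 1] extrapolate. This linear blending is an assumption
    of the sketch, not necessarily what the paper's hardware implements.
    """
    return [
        ((1 - alpha) * gx + alpha * wx, (1 - alpha) * gy + alpha * wy)
        for (gx, gy), (wx, wy) in zip(grid, warp)
    ]


if __name__ == "__main__":
    # Eight output views spaced a quarter of the input baseline apart.
    print(view_positions(8, 0.25))
    # Two grid vertices warped halfway between the left and right views.
    print(interpolate_warp([(0.0, 0.0), (10.0, 0.0)], [(4.0, 0.0), (12.0, 0.0)], 0.5))
```

With eight views and a spacing of 0.25, six of the resulting positions fall between or beyond the two input views and would have to be synthesized, which illustrates why the display's view count, not the content's, drives the processing load.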