Globally Continuous and Non-Markovian Activity Analysis from Videos

By using non-parametric Bayesian methods, we learn coupled spatial and temporal patterns with minimal prior knowledge.

September 15, 2016

European Conference on Computer Vision (ECCV) 2016

Authors

He Wang (Disney Research)

Carol O’Sullivan (Disney Research)

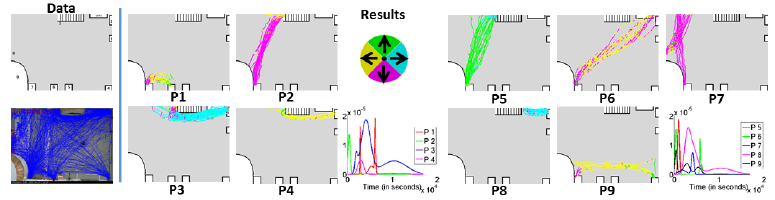

Automatically recognizing activities in video is a classic problem in computer vision; it helps to understand behaviors, describe scenes, and detect anomalies. We propose an unsupervised method for these purposes. Given video data, we discover recurring activity patterns that appear, peak, wane, and disappear over time. By using non-parametric Bayesian methods, we learn coupled spatial and temporal patterns with minimal prior knowledge. To model how patterns change over time, previous work computes Markovian progressions or locally continuous motifs, whereas we model time in a globally continuous and non-Markovian way. Visually, the patterns depict the flows of major activities. Temporally, each pattern has its own appearance-disappearance cycle. To compute compact pattern representations, we also propose a hybrid sampling method. By combining these patterns with detailed environment information, we interpret the semantics of activities and report anomalies. Our method also fits data better and detects anomalies that were previously difficult to detect.
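For readers unfamiliar with non-parametric Bayesian priors, the sketch below illustrates the general idea that the number of patterns does not have to be fixed in advance. It samples cluster labels from a Chinese restaurant process, a standard textbook construction. This is only an illustration under our own assumptions: it is not the model or the hybrid sampling method described in the paper, and the function name `crp_assignments` and the concentration parameter `alpha` are hypothetical choices made for this example.

```python
import random


def crp_assignments(n_items, alpha, seed=0):
    """Sample cluster labels for n_items from a Chinese restaurant process.

    Illustrative only: a generic non-parametric prior, not the paper's model.
    alpha (> 0) is a hypothetical concentration parameter; larger values
    tend to open more clusters.
    """
    rng = random.Random(seed)
    counts = []  # counts[k] = number of items already assigned to cluster k
    labels = []
    for _ in range(n_items):
        # An existing cluster k is chosen with weight counts[k];
        # a brand-new cluster is opened with weight alpha.
        weights = counts + [alpha]
        k = rng.choices(range(len(weights)), weights=weights)[0]
        if k == len(counts):
            counts.append(1)  # open a new cluster
        else:
            counts[k] += 1    # join an existing cluster
        labels.append(k)
    return labels, counts


if __name__ == "__main__":
    labels, counts = crp_assignments(n_items=200, alpha=1.0)
    print("number of clusters discovered:", len(counts))
    print("cluster sizes:", counts)
```

The number of clusters here is an outcome of sampling rather than an input, which is the property that lets such priors operate with little prior knowledge about how many activity patterns a scene contains.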