Dynamic Word Embeddings

We present a probabilistic language model for time-stamped text data which tracks the semantic evolution of individual words over time.

August 6, 2017

International Conference on Machine Learning (ICML) 2017

Authors

Robert Bamler (Disney Research)

Stephan Mandt (Disney Research)


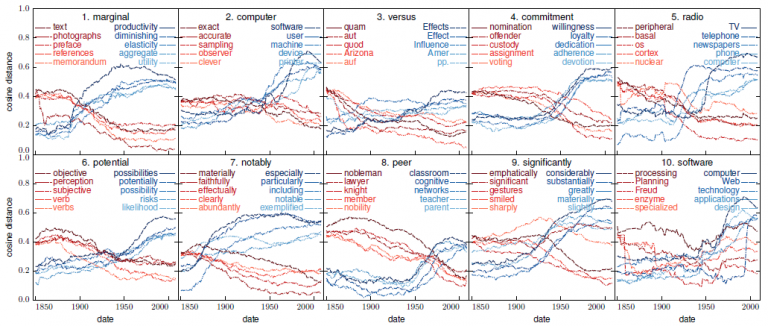

We present a probabilistic language model for time-stamped text data which tracks the semantic evolution of individual words over time. The model represents words and contexts by latent trajectories in an embedding space. At each moment in time, the embedding vectors are inferred from a probabilistic version of word2vec (Mikolov et al., 2013b). These embedding vectors are connected in time through a latent diffusion process. We describe two scalable variational inference algorithms, skip-gram smoothing and skip-gram filtering, that allow us to train the model jointly over all times, thus learning on all data while simultaneously allowing word and context vectors to drift. Experimental results on three different corpora demonstrate that our dynamic model infers word embedding trajectories that are more interpretable and lead to higher predictive likelihoods than competing methods that are based on static models trained separately on time slices.
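To make the two ingredients of the abstract concrete, the following is a minimal sketch, not the paper's implementation. It assumes the latent diffusion process can be approximated in discrete time by a plain Gaussian random walk, and that the probabilistic word2vec likelihood scores a (word, context) pair with the sigmoid of the inner product of their vectors. All function names and the parameters `step_std`, `init_std`, `n_pos`, and `n_neg` are hypothetical and chosen only for illustration.

```python
import math
import random


def random_walk_trajectory(num_steps, dim, step_std, init_std, rng):
    """Sample one embedding trajectory from a Gaussian random walk.

    Assumption: the random walk stands in for the latent diffusion process.
    """
    vec = [rng.gauss(0.0, init_std) for _ in range(dim)]
    trajectory = [vec]
    for _ in range(num_steps - 1):
        # Each time step perturbs the previous vector by small Gaussian noise.
        vec = [x + rng.gauss(0.0, step_std) for x in vec]
        trajectory.append(vec)
    return trajectory


def gaussian_log_pdf(x, mean, std):
    """Log-density of a univariate normal distribution."""
    return -0.5 * math.log(2.0 * math.pi * std ** 2) - (x - mean) ** 2 / (2.0 * std ** 2)


def trajectory_log_prior(trajectory, step_std, init_std):
    """Log-prior of a trajectory under the assumed random walk."""
    log_p = sum(gaussian_log_pdf(x, 0.0, init_std) for x in trajectory[0])
    for prev, cur in zip(trajectory, trajectory[1:]):
        log_p += sum(gaussian_log_pdf(c, p, step_std) for p, c in zip(prev, cur))
    return log_p


def log_sigmoid(x):
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def skipgram_log_likelihood(word_vec, context_vec, n_pos, n_neg):
    """Log-likelihood of n_pos observed and n_neg negative (word, context) pairs.

    Assumption: pairs are scored by the sigmoid of the inner product.
    """
    score = sum(u * v for u, v in zip(word_vec, context_vec))
    return n_pos * log_sigmoid(score) + n_neg * log_sigmoid(-score)


if __name__ == "__main__":
    rng = random.Random(0)
    word = random_walk_trajectory(num_steps=4, dim=3, step_std=0.1, init_std=1.0, rng=rng)
    context = random_walk_trajectory(num_steps=4, dim=3, step_std=0.1, init_std=1.0, rng=rng)
    # Joint log-probability: prior over both trajectories plus one likelihood term per time step.
    log_joint = trajectory_log_prior(word, 0.1, 1.0) + trajectory_log_prior(context, 0.1, 1.0)
    for u_t, v_t in zip(word, context):
        log_joint += skipgram_log_likelihood(u_t, v_t, n_pos=5, n_neg=5)
    print(log_joint)
```

In this sketch a small `step_std` ties consecutive time steps together, so every time slice informs its neighbors, while a larger value lets the vectors drift more freely. The variational inference algorithms named in the abstract are not reproduced here; the sketch only evaluates a joint log-probability of the kind such algorithms would work with.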