Scalable Music: Automatic Music Retargeting and Synthesis

In this paper, we propose a method for dynamic rescaling of music, inspired by recent work on image retargeting, video reshuffling, and character animation in the computer graphics community.

May 6, 2013

Eurographics 2013

Authors

Simon Wenner (ETH Zurich)

Jean-Charles Bazin (ETH Zurich)

Alexander Sorkine-Hornung (Disney Research)

Changil Kim (Disney Research/ETH Joint PhD)

Markus Gross (Disney Research/ETH Zurich)

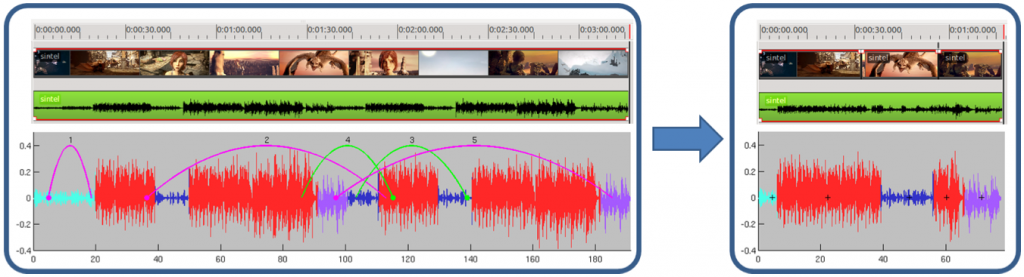

Given a desired target length for a piece of music and optional constraints such as the position and importance of certain parts, we build on concepts from seam carving, video textures, and motion graphs and extend them to allow global optimization of jumps in an audio signal. Based on automatic feature extraction and spectral clustering for segmentation, we employ a length-constrained least-cost path search via dynamic programming to synthesize a novel piece of music that best fulfills all desired constraints, with imperceptible transitions between reshuffled parts. We show various applications of music retargeting, such as part removal, decreasing or increasing music duration, and, in particular, consistent joint video and audio editing.
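To make the idea of a length-constrained least-cost path search more concrete, the following is a minimal sketch and not the authors' implementation. It assumes the music has already been split into segments with positive integer durations (for example, in beats) and that a transition cost between every pair of segments is available. The function name `retarget_path`, the `durations` list, and the values in the `cost` matrix are hypothetical and chosen only for illustration; feature extraction, spectral clustering, and user constraints are left out.

```python
import math

def retarget_path(durations, cost, target, start=0, end=None):
    """Cheapest sequence of segments from `start` to `end` whose
    durations sum exactly to `target`. Returns (total_cost, path);
    (math.inf, []) if no such sequence exists.

    durations: positive integer length of each segment (assumed units: beats)
    cost[i][j]: assumed cost of jumping from segment i to segment j
    """
    n = len(durations)
    if end is None:
        end = n - 1
    # best[t][j]: minimal cost of a path that ends in segment j
    # and has total duration t.
    best = [[math.inf] * n for _ in range(target + 1)]
    back = [[None] * n for _ in range(target + 1)]
    if durations[start] <= target:
        best[durations[start]][start] = 0.0
    # Durations are positive, so every extension increases t and the
    # table can be filled in order of increasing total duration.
    for t in range(1, target + 1):
        for i in range(n):
            if best[t][i] == math.inf:
                continue
            for j in range(n):
                t2 = t + durations[j]
                if t2 > target:
                    continue
                candidate = best[t][i] + cost[i][j]
                if candidate < best[t2][j]:
                    best[t2][j] = candidate
                    back[t2][j] = (t, i)
    if best[target][end] == math.inf:
        return math.inf, []
    # Follow the back-pointers from the final state to recover the path.
    path, state = [], (target, end)
    while state is not None:
        t, j = state
        path.append(j)
        state = back[t][j]
    path.reverse()
    return best[target][end], path

# Hypothetical example: four segments of two beats each. Playing the
# segments in their original order (i -> i + 1) costs nothing.
durations = [2, 2, 2, 2]
cost = [
    [5, 0, 1, 9],
    [9, 5, 0, 2],
    [9, 3, 5, 0],
    [9, 9, 9, 5],
]

shorter = retarget_path(durations, cost, target=6)    # drop one segment
original = retarget_path(durations, cost, target=8)   # original length
longer = retarget_path(durations, cost, target=10)    # repeat material
```

In this toy setting, shortening the piece skips a segment where the jump is cheapest, and lengthening it inserts a backward jump so that earlier material is repeated. A real system would derive the transition costs from audio features and would need additional terms for constraints such as required or forbidden parts.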