VideoSnapping: Interactive Synchronization of Multiple Videos

We present an interactive method for computing optimal nonlinear temporal video alignments of an arbitrary number of videos.

July 27, 2014

ACM SIGGRAPH 2014

Authors

Oliver Wang (Disney Research)

Christopher Schroers (Disney Research)

Henning Zimmer (Disney Research)

Markus Gross (Disney Research/ETH Zurich)

Alexander Sorkine-Hornung (Disney Research)

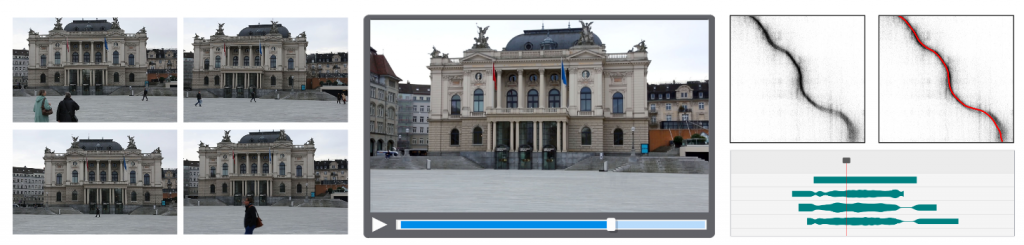


Aligning video is a fundamental task in computer graphics and vision, required for a wide range of applications. We present an interactive method for computing optimal nonlinear temporal video alignments of an arbitrary number of videos. We first derive a robust approximation of alignment quality between pairs of clips, computed as a weighted histogram of feature matches. We then find optimal temporal mappings (constituting frame correspondences) using a graph-based approach that allows for very efficient evaluation with artist constraints. This enables an enhancement to the “snapping” interface in video editing tools, where videos in a timeline are now able to snap to one another when dragged by an artist based on their content, rather than simply on their start and end times. The pairwise snapping is then generalized to multiple clips, achieving a globally optimal temporal synchronization that automatically arranges a series of clips filmed at different times into a single consistent time frame. When followed by a simple spatial registration, we achieve high-quality spatiotemporal video alignments at a fraction of the computational complexity compared to previous methods. Assisted temporal alignment is a degree of freedom that has been largely unexplored, yet it is an important task in video editing. Our approach is simple to implement, highly efficient, and very robust to differences in video content, allowing for interactive exploration of the temporal alignment space for multiple real-world HD videos.
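To make the idea of a nonlinear temporal mapping concrete, the following is a minimal sketch, not the method from the paper. It assumes a precomputed cost matrix in which `cost[i, j]` is low when frame `i` of one clip resembles frame `j` of the other (for instance, derived from feature-match counts), and it substitutes classic dynamic time warping for the graph-based formulation, which the abstract does not spell out. The function name `align_clips` and the cost definition are hypothetical, and the sketch forces both clips to overlap completely, which a real snapping tool would likely relax.

```python
import numpy as np


def align_clips(cost):
    """Return a monotonic frame mapping [(i, j), ...] with minimal summed cost.

    cost[i, j] is an assumed dissimilarity between frame i of clip A and
    frame j of clip B. The path starts at (0, 0) and ends at the last frames.
    """
    n, m = cost.shape
    acc = np.full((n, m), np.inf)  # accumulated cost of the best path to (i, j)
    acc[0, 0] = cost[0, 0]
    for i in range(n):
        for j in range(m):
            if i == 0 and j == 0:
                continue
            best = np.inf
            if i > 0 and j > 0:
                best = min(best, acc[i - 1, j - 1])  # advance both clips
            if i > 0:
                best = min(best, acc[i - 1, j])      # advance clip A only
            if j > 0:
                best = min(best, acc[i, j - 1])      # advance clip B only
            acc[i, j] = cost[i, j] + best

    # Backtrack from the last frame pair, preferring diagonal steps on ties.
    i, j = n - 1, m - 1
    path = [(i, j)]
    while (i, j) != (0, 0):
        candidates = []
        if i > 0 and j > 0:
            candidates.append((acc[i - 1, j - 1], (i - 1, j - 1)))
        if i > 0:
            candidates.append((acc[i - 1, j], (i - 1, j)))
        if j > 0:
            candidates.append((acc[i, j - 1], (i, j - 1)))
        _, (i, j) = min(candidates, key=lambda c: c[0])
        path.append((i, j))
    path.reverse()
    return path
```

Each pair `(i, j)` in the returned path is one frame correspondence. A clip played at half speed against its original would produce a path that advances one index twice as often as the other, which is the kind of nonlinear mapping the abstract refers to.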