Facial Performance Enhancement Using Dynamic Shape Space Analysis


March 1, 2014

ACM Transactions on Graphics (TOG) 2014

Authors

Amit Bermano (Disney Research/ETH Joint PhD)

Derek Bradley (Disney Research)

Thabo Beeler (Disney Research)

Fabio Zünd (Disney Research/ETH Joint PhD)

Derek Nowrouzezahrai (Disney Research)

Ilya Baran (Disney Research)

Olga Sorkine-Hornung (ETH Zurich)

Hanspeter Pfister (Disney Research/Harvard University)

Robert W. Sumner (Disney Research)

Bernd Bickel (Disney Research)

Markus Gross (Disney Research/ETH Zurich)


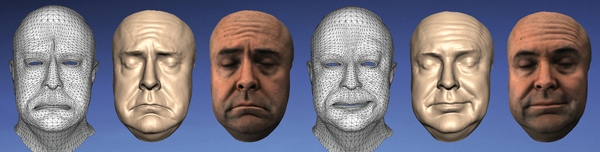

The facial performance of an individual is inherently rich in subtle deformation and timing details. Although these subtleties make the performance realistic and compelling, they often elude both motion capture and hand animation. We present a technique for adding fine-scale details and expressiveness to low-resolution art-directed facial performances, such as those created manually using a rig, via marker-based capture, by fitting a morphable model to a video, or through Kinect-based reconstruction using the recent faceshift technology. We employ a high-resolution facial performance capture system to acquire a representative performance of an individual in which he or she explores the full range of facial expressions. From the captured data, our system extracts an expressiveness model that encodes subtle spatial and temporal deformation details specific to that particular individual. Once this model has been built, these details can be transferred to low-resolution art-directed performances. We demonstrate results on various forms of input. After our enhancement, the resulting animations exhibit the same nuances and fine spatial details as the captured performance, with optional temporal enhancement to match the dynamics of the actor. Finally, we show experimentally that our technique compares favorably to the current state of the art in example-based facial animation.