Coordinated Multi-Agent Imitation Learning

We propose a joint approach that simultaneously learns a latent coordination model along with the individual policies.

August 6, 2017

International Conference on Machine Learning (ICML) 2017

Authors

Hoang Le (California Institute of Technology)

Yisong Yue (California Institute of Technology)

Peter Carr (Disney Research)

Patrick Lucey (STATS LLC)


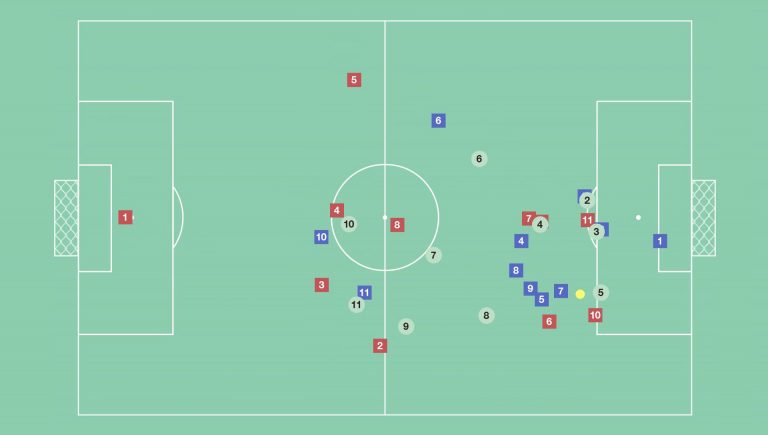

We study the problem of imitation learning from demonstrations of multiple coordinating agents. One key challenge in this setting is that learning a good model of coordination can be difficult, since coordination is often implicit in the demonstrations and must be inferred as a latent variable. We propose a joint approach that simultaneously learns a latent coordination model along with the individual policies. In particular, our method integrates unsupervised structure learning with conventional imitation learning. We illustrate the power of our approach on the difficult problem of learning multiple policies for fine-grained behavior modeling in team sports, where different players occupy different roles in the coordinated team strategy. We show that having a coordination model to infer the roles of players yields substantially lower imitation loss than conventional baselines.
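To make the idea of inferring roles as a latent variable more concrete, the following is a minimal sketch and not the authors' method. It assumes each demonstration frame is a list of 2D agent positions in arbitrary order, and it replaces the latent coordination model with a much simpler hard-assignment scheme: alternate between assigning agents to roles by minimum total squared distance to per-role centroids, and recomputing those centroids. All function names (`assign_roles`, `fit_roles`, `reorder_by_role`) and the toy data layout are hypothetical.

```python
from itertools import permutations


def sq_dist(p, q):
    """Squared Euclidean distance between two 2D points."""
    return (p[0] - q[0]) ** 2 + (p[1] - q[1]) ** 2


def assign_roles(positions, centroids):
    """Return a tuple `roles` where roles[i] is the role index given to agent i.

    Brute-force search over all permutations, so only suitable for a
    handful of agents (an assumption made to keep the sketch dependency-free).
    """
    n = len(positions)
    return min(
        permutations(range(n)),
        key=lambda perm: sum(
            sq_dist(positions[i], centroids[perm[i]]) for i in range(n)
        ),
    )


def fit_roles(frames, n_iters=10):
    """Alternate between role assignment and centroid updates.

    frames: list of frames, each a list of (x, y) positions, one per agent.
    Returns (centroids, assignments), with one assignment tuple per frame.
    """
    n = len(frames[0])
    centroids = [tuple(p) for p in frames[0]]  # initialise from the first frame
    assignments = []
    for _ in range(n_iters):
        # Step 1: infer the role of every agent in every frame.
        assignments = [assign_roles(f, centroids) for f in frames]
        # Step 2: update each role centroid as the mean of its assigned positions.
        for r in range(n):
            pts = [
                f[i]
                for f, a in zip(frames, assignments)
                for i in range(n)
                if a[i] == r
            ]
            centroids[r] = (
                sum(p[0] for p in pts) / len(pts),
                sum(p[1] for p in pts) / len(pts),
            )
    return centroids, assignments


def reorder_by_role(frame, roles):
    """Reorder agents so that index r always holds the agent playing role r."""
    ordered = [None] * len(frame)
    for agent, role in enumerate(roles):
        ordered[role] = frame[agent]
    return ordered
```

Under these assumptions, frames reordered with `reorder_by_role` present each role at a fixed index, so a separate policy per role could then be trained with any conventional imitation learning method. In a joint approach, the two stages would be interleaved rather than run once in sequence.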