Augmented Reality Dialog Interface for Multimodal Teleoperation

August 28, 2017

International Symposium on Robot and Human Interactive Communication (RO-MAN) 2017

Authors

Andre Pereira (Disney Research)

Elizabeth J. Carter (Disney Research)

Iolanda Leite (Disney Research)

John Mars (Disney Research)

Jill Lehman (Disney Research)

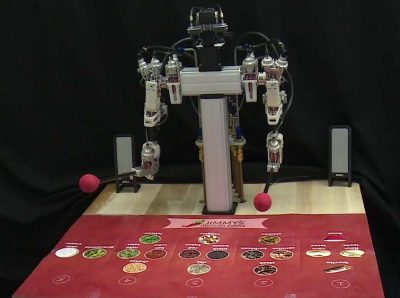

We designed an augmented reality interface for dialog that enables the control of multimodal behaviors in telepresence robot applications. When paired with a telepresence robot, this interface enables a single operator to accurately control and coordinate the robot’s verbal and nonverbal behaviors. Depending on the complexity of the desired interaction, however, some applications might benefit from having multiple operators control different interaction modalities. Our interface can therefore be used by either a single operator or a pair of operators. In the paired-operator system, one operator controls verbal behaviors while the other controls nonverbal behaviors. We conducted a within-subjects user study to assess the usefulness and validity of our interface in both the single- and paired-operator setups. On hard tasks, coordination between verbal and nonverbal behavior improves in the single-operator condition. Although single operators are slower to produce verbal responses, verbal error rates do not differ between conditions. Finally, single operators who control both the verbal and nonverbal behaviors of the robot show significantly improved presence measures, including mental immersion, sensory engagement, ability to view and understand the dialog partner, and degree of emotion.