Wall++: Room-Scale Interactive and Context-Aware Sensing

We present Wall++, a low-cost sensing approach that allows walls to become a smart infrastructure.

April 21, 2018

ACM Conference on Human Factors in Computing Systems (CHI) 2018

Authors

Yang Zhang (Disney Research/Carnegie Mellon University)

Chouchang (Jack) Yang (Disney Research)

Scott Hudson (Disney Research/Carnegie Mellon University)

Chris Harrison (Disney Research/Carnegie Mellon University)

Alanson Sample (Disney Research)

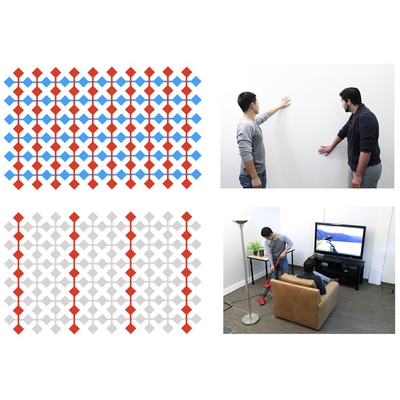

Human environments are typified by walls: homes, offices, schools, museums, hospitals and nearly every other indoor context one can imagine has them. In many cases, walls make up a majority of the readily accessible indoor surface area, and yet they are static; their primary function is to separate spaces and hide infrastructure. We present Wall++, a low-cost sensing approach that allows walls to become a smart infrastructure. Instead of merely separating spaces, walls can now enhance rooms with sensing and interactivity. Our wall treatment and sensing hardware can track users’ touch and gestures, as well as estimate body pose when users are close to the wall. By capturing airborne electromagnetic noise, we can also detect which appliances are active and where they are located. Through a series of evaluations, we demonstrate that Wall++ can enable robust room-scale interactive and context-aware applications.