Semantic Deep Face Models

We present a method for nonlinear 3D face modeling using neural architectures.

November 25, 2020

International Conference on 3D Vision (3DV) (2020)

Authors

Prashanth Chandran (DisneyResearch|Studios/ETH Joint PhD)

Derek Bradley (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH Zurich)

Thabo Beeler (DisneyResearch|Studios)

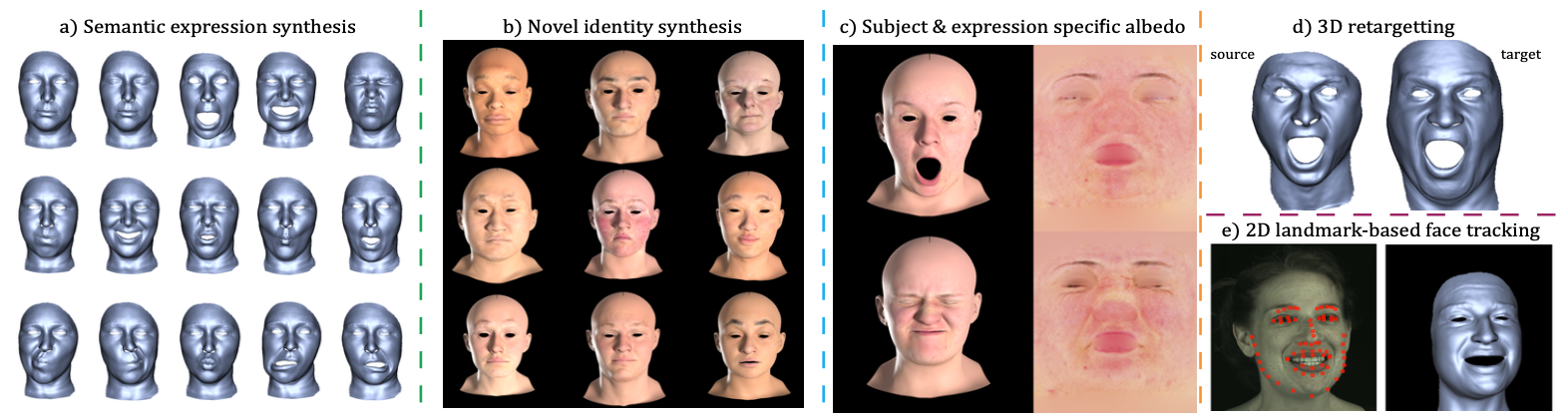

Face models built from 3D face databases are often used in computer vision and graphics tasks such as face reconstruction, replacement, tracking, and manipulation. For such tasks, the commonly used multi-linear morphable models provide semantic control over facial identity and expression, but often lack quality and expressivity due to their linear nature. Deep neural networks offer the possibility of nonlinear face modeling. So far, however, most research has focused on generating realistic facial images, with less attention to 3D geometry, and the methods that do produce geometry have little or no notion of semantic control, which limits their artistic applicability. We present a method for nonlinear 3D face modeling using neural architectures that provides intuitive semantic control over both identity and expression by disentangling these dimensions from each other, essentially combining the benefits of multi-linear face models and nonlinear deep face networks. The result is a powerful, semantically controllable, nonlinear, parametric face model. We demonstrate the value of our semantic deep face model with applications to 3D face synthesis, facial performance transfer, performance editing, and 2D landmark-based performance retargeting.
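
To make the "linear nature" of multi-linear morphable models concrete, the following is a minimal NumPy sketch of a generic bilinear face model, not the method presented in this paper. The function name `multilinear_face`, the tensor shapes, and the random data are all assumptions chosen for illustration. It shows that, with identity held fixed, blending two expression weight vectors can only produce the straight-line blend of the two resulting shapes.

```python
import numpy as np

def multilinear_face(core, mean, w_id, w_exp):
    """Evaluate a generic bilinear face model (illustrative, hypothetical).

    core:  (3 * n_vertices, n_id, n_exp) core tensor
    mean:  (3 * n_vertices,) mean shape
    w_id:  (n_id,) identity weights
    w_exp: (n_exp,) expression weights
    Returns an (n_vertices, 3) array of vertex positions.
    """
    # Contract the core tensor with the identity and expression weights.
    offsets = np.einsum("vie,i,e->v", core, w_id, w_exp)
    return (mean + offsets).reshape(-1, 3)

# Random stand-in data; a real model would be fit to a 3D face database.
rng = np.random.default_rng(0)
n_vertices, n_id, n_exp = 5, 4, 3
core = rng.normal(size=(3 * n_vertices, n_id, n_exp))
mean = rng.normal(size=3 * n_vertices)
w_id = rng.normal(size=n_id)
w_exp_a = rng.normal(size=n_exp)
w_exp_b = rng.normal(size=n_exp)

face_a = multilinear_face(core, mean, w_id, w_exp_a)
face_b = multilinear_face(core, mean, w_id, w_exp_b)
face_mid = multilinear_face(core, mean, w_id, 0.5 * (w_exp_a + w_exp_b))

# For a fixed identity, the model is linear in the expression weights:
# averaging the weights yields exactly the average of the two shapes.
assert np.allclose(face_mid, 0.5 * (face_a + face_b))
```

Identity and expression are separated here only because they are separate inputs to a tensor contraction. A nonlinear model with the same two inputs would replace the contraction with a learned decoder, which is the general direction the abstract describes.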