Lossy Image Compression with Normalizing Flows

We propose a deep image compression method that is similarly able to go from low bit-rates to near lossless quality, by leveraging normalizing flows to learn a bijective mapping from the image space to a latent representation.

May 8, 2021

Neural Compression Workshop @ ICLR (2021)

Authors

Leonhard Helminger (DisneyResearch|Studios/ETH Joint PhD)

Abdelaziz Djelouah (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH Zurich)

Christopher Schroers (DisneyResearch|Studios)

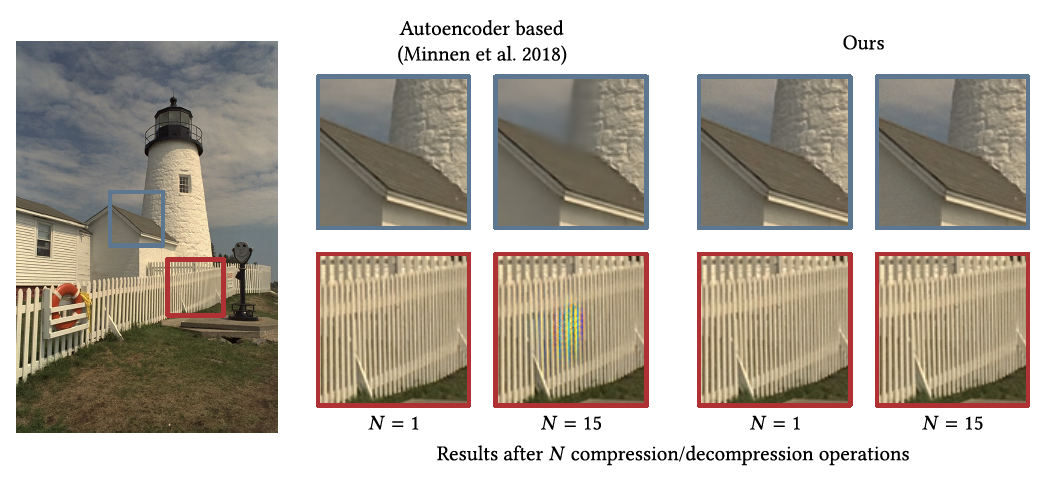

Deep learning based image compression has recently witnessed exciting progress and in some cases even managed to surpass transform coding based approaches. However, state-of-the-art solutions for deep image compression typically employ autoencoders which map the input to a lower dimensional latent space and thus irreversibly discard information already before quantization. In contrast, traditional approaches in image compression employ an invertible transformation before performing the quantization step. In this work, we propose a deep image compression method that is similarly able to go from low bit-rates to near lossless quality, by leveraging normalizing flows to learn a bijective mapping from the image space to a latent representation. We demonstrate further advantages unique to our solution, such as the ability to maintain constant quality results through re-encoding, even when performed multiple times. To the best of our knowledge, this is the first work leveraging normalizing flows for lossy image compression.
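To make the idea concrete, the following is a minimal toy sketch, not the architecture from the paper. It assumes a single additive coupling layer with random, untrained weights as a stand-in for a learned normalizing flow, and uniform rounding as the quantizer. The names `coupling_forward`, `coupling_inverse`, `quantize`, and `codec` are hypothetical and chosen for illustration only. The sketch shows three properties the abstract relies on: the transform is invertible, quantization is the only lossy step (so a finer step approaches lossless reconstruction), and re-encoding a decoded signal reproduces the same latent.

```python
import numpy as np


def coupling_forward(x, w, b):
    """Additive coupling: keep the first half, shift the second half
    by a function of the first half. Invertible by construction."""
    x1, x2 = np.split(x, 2)
    return np.concatenate([x1, x2 + np.tanh(w @ x1 + b)])


def coupling_inverse(z, w, b):
    """Exact inverse of coupling_forward: subtract the same shift."""
    z1, z2 = np.split(z, 2)
    return np.concatenate([z1, z2 - np.tanh(w @ z1 + b)])


def quantize(z, step):
    """Uniform scalar quantization; the only lossy operation here."""
    return np.round(z / step) * step


def codec(x, w, b, step):
    """Encode (transform + quantize), then decode (inverse transform)."""
    z_hat = quantize(coupling_forward(x, w, b), step)
    return coupling_inverse(z_hat, w, b), z_hat


rng = np.random.default_rng(0)
w = rng.normal(size=(4, 4))   # untrained weights (assumption)
b = rng.normal(size=4)
x = rng.uniform(size=8)       # stand-in for a flattened image patch

# 1) Without quantization the mapping is bijective (up to float error).
roundtrip_error = np.max(np.abs(coupling_inverse(coupling_forward(x, w, b), w, b) - x))

# 2) Quantization step size controls the distortion.
x_coarse, z_coarse = codec(x, w, b, step=0.1)
x_fine, _ = codec(x, w, b, step=0.001)
err_coarse = np.max(np.abs(x_coarse - x))
err_fine = np.max(np.abs(x_fine - x))

# 3) Re-encoding the decoded signal lands on the same quantized latent.
x_again, z_again = codec(x_coarse, w, b, step=0.1)
```

In this toy setting the decoded signal stays in floating point. A practical codec would also round the decoded image to integer pixel values, which this sketch does not model.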