An Implicit Physical Face Model Driven by Expression and Style

We propose a new face model based on a data-driven implicit neural physics model that can be driven by both expression and style separately. At its core is a framework for learning implicit physics-based actuations for multiple subjects simultaneously, trained on a few arbitrary performance capture sequences from a small set of identities.

December 11, 2023

ACM SIGGRAPH Asia (2023)

Authors

Lingchen Yang (ETH Zurich)

Gaspard Zoss (DisneyResearch|Studios)

Prashanth Chandran (DisneyResearch|Studios)

Paulo Gotardo (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH Zurich)

Barbara Solenthaler (ETH Zurich)

Eftychios Sifakis (University of Wisconsin)

Derek Bradley (DisneyResearch|Studios)


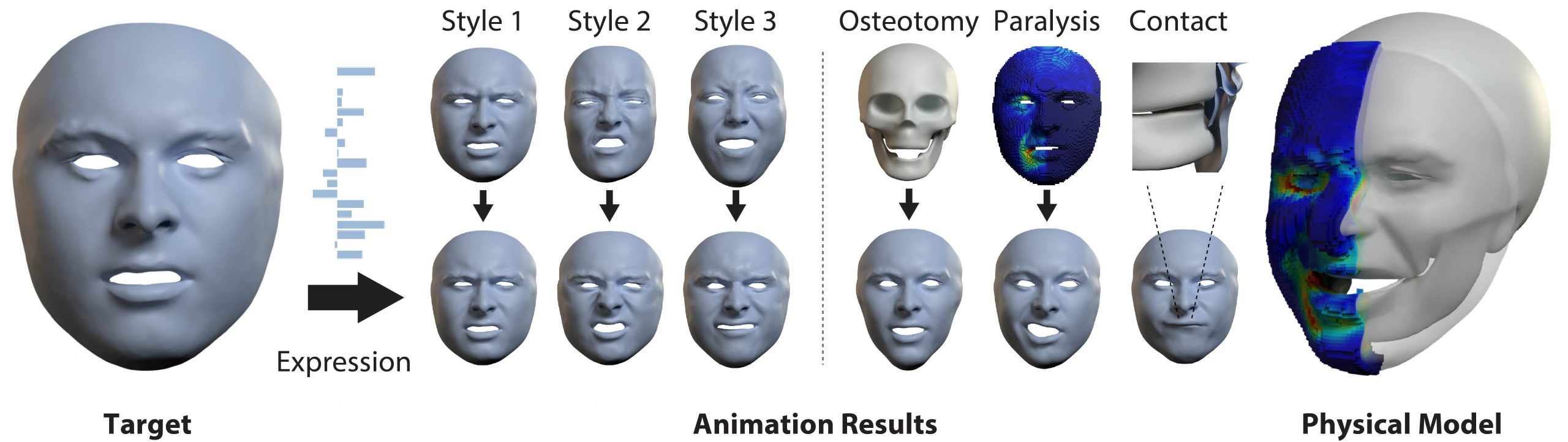

3D facial animation is often produced by manipulating facial deformation models (or rigs) that are traditionally parameterized by expression controls. A key component that is usually overlooked is expression "style", that is, how a particular expression is performed. Although it is common to define a semantic basis of expressions that characters can perform, most characters perform each expression in their own style. To date, style is usually entangled with the expression, so it is not possible to transfer the style of one character to another in facial animation. We present a new face model, based on a data-driven implicit neural physics model, that can be driven by both expression and style separately. At its core is a framework for learning implicit physics-based actuations for multiple subjects simultaneously, trained on a few arbitrary performance capture sequences from a small set of identities. Once trained, our method enables generalized physics-based facial animation for any of the trained identities, extending to unseen performances. Furthermore, it grants control over the animation style, enabling style transfer from one character to another or blending the styles of different characters. Lastly, as a physics-based model, it can synthesize physical effects such as collision handling, which sets our method apart from conventional approaches.
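To make "driven by expression and style separately" concrete, here is a minimal, hypothetical sketch; it is not the authors' architecture. It assumes an implicit actuation field: a small MLP queried at continuous material points `x` and conditioned on two separate latent codes, one for expression and one for style. It also assumes, as is common in active soft-body muscle models, that the actuation at each point is a symmetric 3x3 matrix. All names (`actuation`, `blend_styles`, `EXPR_DIM`, `STYLE_DIM`) and dimensions are illustrative assumptions, and the weights are random rather than trained. Style transfer is shown as pairing one character's expression code with another's style code, and style blending as linear interpolation of style codes. Both are assumptions about how such controls could be exposed. In a full physics-based pipeline, the resulting actuations would drive a soft-body simulation, which this sketch omits.

```python
import numpy as np

# Hypothetical latent sizes; the actual dimensions are not given in the abstract.
EXPR_DIM, STYLE_DIM, HIDDEN = 8, 4, 32

rng = np.random.default_rng(0)
# Untrained random weights for a tiny two-layer MLP (illustration only).
W1 = rng.normal(scale=0.1, size=(3 + EXPR_DIM + STYLE_DIM, HIDDEN))
b1 = np.zeros(HIDDEN)
W2 = rng.normal(scale=0.1, size=(HIDDEN, 6))
b2 = np.zeros(6)

IU = np.triu_indices(3)  # row/column indices of the 6 upper-triangular entries


def actuation(x, expr, style):
    """Query a hypothetical implicit actuation field at points x of shape (N, 3).

    The expression and style codes are separate inputs, so either one can be
    changed while the other is held fixed. Returns (N, 3, 3) symmetric matrices
    of the form identity + learned offset (zero offset means rest state).
    """
    n = x.shape[0]
    code = np.concatenate(
        [np.broadcast_to(expr, (n, EXPR_DIM)), np.broadcast_to(style, (n, STYLE_DIM))],
        axis=1,
    )
    h = np.tanh(np.concatenate([x, code], axis=1) @ W1 + b1)
    s = h @ W2 + b2  # 6 independent entries of a symmetric 3x3 matrix
    A = np.zeros((n, 3, 3))
    A[:, IU[0], IU[1]] = s  # fill upper triangle (including diagonal)
    A[:, IU[1], IU[0]] = s  # mirror into lower triangle
    return np.eye(3) + A


def blend_styles(style_a, style_b, t):
    """Linearly interpolate two style codes (an assumed blending scheme)."""
    return (1.0 - t) * style_a + t * style_b


# Usage: one expression performed in character B's style, then in a 50/50 mix.
x = rng.uniform(-1.0, 1.0, size=(5, 3))  # sample points inside the face volume
smile = rng.normal(size=EXPR_DIM)        # hypothetical expression code
style_a = rng.normal(size=STYLE_DIM)     # hypothetical style code, character A
style_b = rng.normal(size=STYLE_DIM)     # hypothetical style code, character B

A_transfer = actuation(x, smile, style_b)
A_mix = actuation(x, smile, blend_styles(style_a, style_b, 0.5))
print(A_transfer.shape)  # (5, 3, 3)
```

Keeping the two codes as separate inputs is what lets the expression stay fixed while only the style varies. Because the field is a function of continuous position, it can be queried at any resolution of the simulation mesh.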