QUADify: Extracting Meshes with Pixel-level Details and Materials from Images

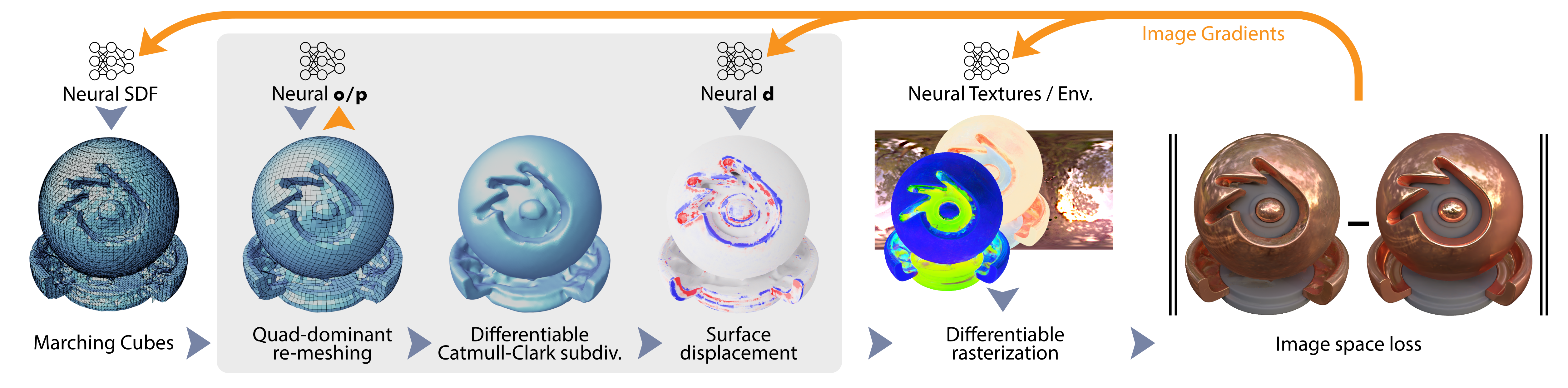

In this work, we propose a method to extract regular quad-dominant meshes from posed images. More specifically, we generate a high-quality 3D model through decomposition into an easily editable quad-dominant mesh with pixel-level details such as displacement, materials, and lighting.

June 17, 2024

CVPR (2024)

Authors

Maximilian Frühauf (DisneyResearch|Studios/ETH Zurich)

Hayko Riemenschneider (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH Zurich)

Christopher Schroers (DisneyResearch|Studios)

Despite exciting progress in automatic 3D reconstruction from images, the excessive number of irregular triangular faces in the resulting meshes remains a significant obstacle to adoption in practical artist workflows. We therefore propose a method to extract regular quad-dominant meshes from posed images. More specifically, we generate a high-quality 3D model through decomposition into an easily editable quad-dominant mesh with pixel-level details such as displacement, materials, and lighting. To enable end-to-end learning of shape and quad topology, we QUADify a neural implicit representation using our novel differentiable re-meshing objective. Unlike previous work, our method exploits artifact-free Catmull-Clark subdivision combined with vertex displacement to extract pixel-level details linked to the base geometry. Finally, we apply differentiable rendering techniques for material and lighting decomposition to optimize for image reconstruction. Our experiments show the benefits of end-to-end re-meshing and demonstrate that our method yields state-of-the-art geometric accuracy while providing lightweight meshes with displacements and textures that are directly compatible with professional renderers and game engines.
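The abstract names two geometric building blocks: Catmull-Clark subdivision of a quad-dominant base mesh and vertex displacement on top of it. The Python sketch that follows is a minimal, generic illustration of those two operations and is not the authors' implementation. It performs one Catmull-Clark step on a closed polygon mesh using the standard face-point, edge-point, and vertex-point rules, then moves vertices by scalar heights along area-weighted vertex normals. The function names, the per-vertex `heights` list, and the cube test mesh are hypothetical. In QUADify the displacement is optimized from images to capture pixel-level detail, and this sketch does not attempt that.

```python
import math
from collections import defaultdict


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def mean(points):
    return tuple(sum(c) / len(points) for c in zip(*points))


def edge_key(a, b):
    # Undirected edge id, independent of traversal direction.
    return (a, b) if a < b else (b, a)


def catmull_clark(verts, faces):
    """One Catmull-Clark step. Assumes a closed 2-manifold mesh (no boundary or crease rules)."""
    face_pts = [mean([verts[i] for i in f]) for f in faces]
    edge_faces, vert_faces = defaultdict(list), defaultdict(list)
    for fi, f in enumerate(faces):
        for k, i in enumerate(f):
            vert_faces[i].append(fi)
            edge_faces[edge_key(i, f[(k + 1) % len(f)])].append(fi)

    # Edge point: average of the two endpoints and the two adjacent face points.
    edge_index, edge_pts, vert_edges = {}, [], defaultdict(list)
    for (a, b), fs in edge_faces.items():
        if len(fs) != 2:
            raise ValueError("sketch only handles closed manifold meshes")
        edge_index[(a, b)] = len(verts) + len(edge_pts)
        edge_pts.append(mean([verts[a], verts[b], face_pts[fs[0]], face_pts[fs[1]]]))
        vert_edges[a].append((a, b))
        vert_edges[b].append((a, b))

    # Vertex point: (Q + 2R + (n - 3) P) / n, with n the valence, Q the mean of
    # adjacent face points, and R the mean of incident edge midpoints.
    moved = []
    for i, p in enumerate(verts):
        n = len(vert_edges[i])
        q = mean([face_pts[fi] for fi in vert_faces[i]])
        r = mean([mean([verts[a], verts[b]]) for a, b in vert_edges[i]])
        moved.append(scale(add(add(q, scale(r, 2.0)), scale(p, n - 3.0)), 1.0 / n))

    # Each k-gon becomes k quads: (vertex, next edge point, face point, previous edge point).
    face_base = len(verts) + len(edge_pts)
    new_faces = []
    for fi, f in enumerate(faces):
        k = len(f)
        for j in range(k):
            nxt = edge_index[edge_key(f[j], f[(j + 1) % k])]
            prv = edge_index[edge_key(f[j - 1], f[j])]
            new_faces.append((f[j], nxt, face_base + fi, prv))
    return moved + edge_pts + face_pts, new_faces


def newell_normal(pts):
    # Unnormalized polygon normal (Newell's method); its length is twice the area.
    nx = ny = nz = 0.0
    for k, (x0, y0, z0) in enumerate(pts):
        x1, y1, z1 = pts[(k + 1) % len(pts)]
        nx += (y0 - y1) * (z0 + z1)
        ny += (z0 - z1) * (x0 + x1)
        nz += (x0 - x1) * (y0 + y1)
    return (nx, ny, nz)


def displace(verts, faces, heights):
    """Move vertex i by heights[i] along its area-weighted vertex normal."""
    acc = [(0.0, 0.0, 0.0)] * len(verts)
    for f in faces:
        n = newell_normal([verts[i] for i in f])
        for i in f:
            acc[i] = add(acc[i], n)
    out = []
    for p, n, h in zip(verts, acc, heights):
        length = math.sqrt(sum(c * c for c in n))
        out.append(add(p, scale(n, h / length)) if length > 0 else p)
    return out


# Hypothetical test mesh: cube [-1, 1]^3 with outward-facing (counter-clockwise) quads.
CUBE_V = [(-1.0, -1.0, -1.0), (1.0, -1.0, -1.0), (1.0, 1.0, -1.0), (-1.0, 1.0, -1.0),
          (-1.0, -1.0, 1.0), (1.0, -1.0, 1.0), (1.0, 1.0, 1.0), (-1.0, 1.0, 1.0)]
CUBE_F = [(0, 3, 2, 1), (4, 5, 6, 7), (0, 1, 5, 4), (1, 2, 6, 5), (2, 3, 7, 6), (3, 0, 4, 7)]
```

For the cube, `catmull_clark(CUBE_V, CUBE_F)` yields 26 vertices (8 moved, 12 edge points, 6 face points) and 24 quads, and the corner (1, 1, 1) moves to (5/9, 5/9, 5/9). After a single step every face is a quad regardless of the input polygon degrees. `displace` then offsets each vertex along its normal, which is the role a learned displacement plays on top of the subdivided base mesh.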