Scalable Structure from Motion for Densely Sampled Videos

The key insight behind this paper is to effectively exploit coherence in densely sampled video input.

June 8, 2015

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2015

Authors

Benjamin Resch (Disney Research/Tübingen University)

Hendrik Lensch (Tübingen University)

Oliver Wang (Disney Research)

Marc Pollefeys (ETH Zurich)

Alexander Sorkine-Hornung (Disney Research)

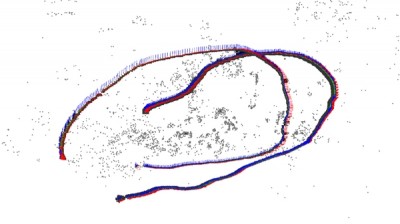

Videos consisting of thousands of high-resolution frames are challenging for existing structure from motion (SfM) and simultaneous localization and mapping (SLAM) techniques. We present a new approach for simultaneously computing extrinsic camera poses and 3D scene structure that can handle such large volumes of image data. The key insight behind this paper is to effectively exploit coherence in densely sampled video input. Our technical contributions include robust tracking and selection of confident video frames, a novel window bundle adjustment, frame-to-structure verification for globally consistent reconstructions with multi-loop closing, and the use of efficient global linear camera pose estimation to link both consecutive and distant bundle adjustment windows. To our knowledge, we describe the first system capable of handling high-resolution, high-frame-rate video data with close to real-time performance. In addition, our approach can robustly integrate data from different video sequences, allowing multiple video streams to be calibrated simultaneously in an efficient and globally optimal way. We demonstrate high-quality alignment on large-scale, challenging datasets, e.g., 2–20 megapixel resolution at frame rates of 25–120 Hz with thousands of frames.
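The abstract does not say how bundle adjustment windows are sized or how they overlap. The sketch below is therefore a hypothetical illustration and not the authors' method. It shows one generic way a long frame sequence could be split into bounded, overlapping windows, so that each local optimization stays small and consecutive windows share frames that could serve as links between them. The function name `overlapping_windows` and the parameters `window_size` and `overlap` are our own assumptions.

```python
from typing import List


def overlapping_windows(num_frames: int, window_size: int, overlap: int) -> List[range]:
    """Hypothetical helper (not from the paper): split frame indices
    0..num_frames-1 into windows of at most `window_size` frames, where
    consecutive windows share exactly `overlap` frames."""
    if window_size <= 0:
        raise ValueError("window_size must be positive")
    if not 0 <= overlap < window_size:
        raise ValueError("overlap must satisfy 0 <= overlap < window_size")
    if num_frames <= 0:
        return []

    step = window_size - overlap  # how far each new window advances
    windows: List[range] = []
    start = 0
    while True:
        end = min(start + window_size, num_frames)
        windows.append(range(start, end))
        if end == num_frames:  # the last window reaches the final frame
            break
        start += step
    return windows


if __name__ == "__main__":
    for w in overlapping_windows(10, 4, 1):
        print(list(w))
```

With 10 frames, a window size of 4, and an overlap of 1, this produces the windows `[0, 1, 2, 3]`, `[3, 4, 5, 6]`, and `[6, 7, 8, 9]`. Frames 3 and 6 are each shared by two windows. Bounding the window size keeps the cost of each local solve roughly constant as the video grows. The paper's actual window selection and linking strategy may differ.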