Enriching Facial Blendshape Rigs with Physical Simulation

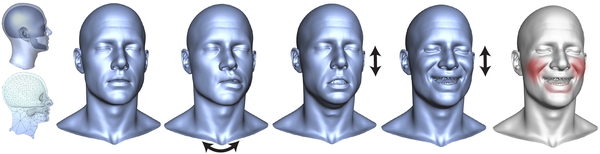

We propose the concept of blendmaterials to give artists an intuitive means to account for changing material properties due to muscle activation.

April 24, 2017

Eurographics 2017

Authors

Yeara Kozlov (Disney Research/ETH Joint PhD)

Derek Bradley (Disney Research)

Moritz Baecher (Disney Research)

Bernhard Thomaszewski (Disney Research)

Thabo Beeler (Disney Research)

Markus Gross (Disney Research/ETH Zurich)


Facial animation is often created separately from overall body motion. Since convincing facial animation is challenging enough in itself, artists tend to create and edit the face motion in isolation. When the face animation is instead derived from motion capture, the capture is typically performed in a mo-cap booth while the actor sits relatively still. In either case, recombining the isolated face animation with body and head motion is non-trivial and often looks uncanny if the body dynamics are not properly reflected on the face (e.g. the bouncing of facial tissue when running). We tackle this problem by introducing a simple and intuitive system that lets artists add physics to facial blendshape animation. Unlike previous methods that try to add physics to face rigs, our method preserves the original facial animation as closely as possible. To this end, we present a novel simulation framework that uses the original animation as per-frame rest poses without adding spurious forces. As a result, in the absence of any external forces or rigid head motion, the facial performance exactly matches the artist-created blendshape animation. In addition, we propose the concept of blendmaterials to give artists an intuitive means to account for changing material properties due to muscle activation. The system automatically combines facial animation and head motion so that they are consistent, while preserving the original animation as closely as possible. It is easy to use and readily integrates with existing animation pipelines.
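The two ideas in the abstract, per-frame rest poses and blendmaterials, can be illustrated with a deliberately small toy model. The sketch below is not the simulation framework from the paper, whose formulation the abstract does not give. It assumes one scalar coordinate per vertex, replaces the physical simulation with an independent damped spring per vertex, and models head motion as a single acceleration value per frame. All names (`blend`, `simulate`, `stiffness_deltas`, and the numeric values) are hypothetical and chosen only for illustration.

```python
def blend(base, deltas, weights):
    """Linear blend: base + sum_i weights[i] * deltas[i], per vertex."""
    return [b + sum(w * d[j] for w, d in zip(weights, deltas))
            for j, b in enumerate(base)]


def simulate(weight_frames, head_accel, neutral, shape_deltas,
             base_stiffness, stiffness_deltas,
             mass=1.0, damping=2.0, dt=1.0 / 60.0):
    """Toy stand-in for a physical simulation (an assumption, not the paper's method).

    Each vertex carries an offset u from the animated pose. The animated pose
    of the current frame acts as the rest pose, so the spring only pulls u
    back to zero and never pulls the face away from the animation.
    """
    n = len(neutral)
    u = [0.0] * n  # offset from the per-frame rest pose
    v = [0.0] * n  # velocity of that offset
    out = []
    for weights, accel in zip(weight_frames, head_accel):
        # Per-frame rest pose: the artist-created blendshape animation.
        rest = blend(neutral, shape_deltas, weights)
        # "Blendmaterial" in this toy: stiffness blended with the same weights.
        k = blend(base_stiffness, stiffness_deltas, weights)
        for j in range(n):
            # Spring and damping act on the offset; head acceleration appears
            # as an inertial force in the head's frame of reference.
            force = -k[j] * u[j] - damping * v[j] - mass * accel
            v[j] += dt * force / mass  # semi-implicit (symplectic) Euler
            u[j] += dt * v[j]
        out.append([r + uj for r, uj in zip(rest, u)])
    return out


# Hypothetical two-vertex rig with one expression shape.
neutral = [0.0, 1.0]
shape_deltas = [[0.2, -0.1]]
base_stiffness = [20.0, 20.0]
stiffness_deltas = [[60.0, 60.0]]  # the expression stiffens the tissue

frames = 120
weights = [[i / (frames - 1)] for i in range(frames)]
animation = [blend(neutral, shape_deltas, w) for w in weights]

still = simulate(weights, [0.0] * frames, neutral, shape_deltas,
                 base_stiffness, stiffness_deltas)
moving = simulate(weights, [5.0] * frames, neutral, shape_deltas,
                  base_stiffness, stiffness_deltas)

print("matches animation without head motion:", still == animation)
print("max deviation with head motion:",
      max(abs(m[0] - a[0]) for m, a in zip(moving, animation)))
```

In this toy, the output equals the blendshape animation whenever the head acceleration is zero, because the only dynamic quantity is the offset from the animated pose and nothing ever excites it. Blending the stiffness with the expression weights means an activated expression responds less to the same head motion, which is the kind of artist-facing control the blendmaterial concept describes.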