Fast and Stable Color Balancing for Images and Augmented Reality

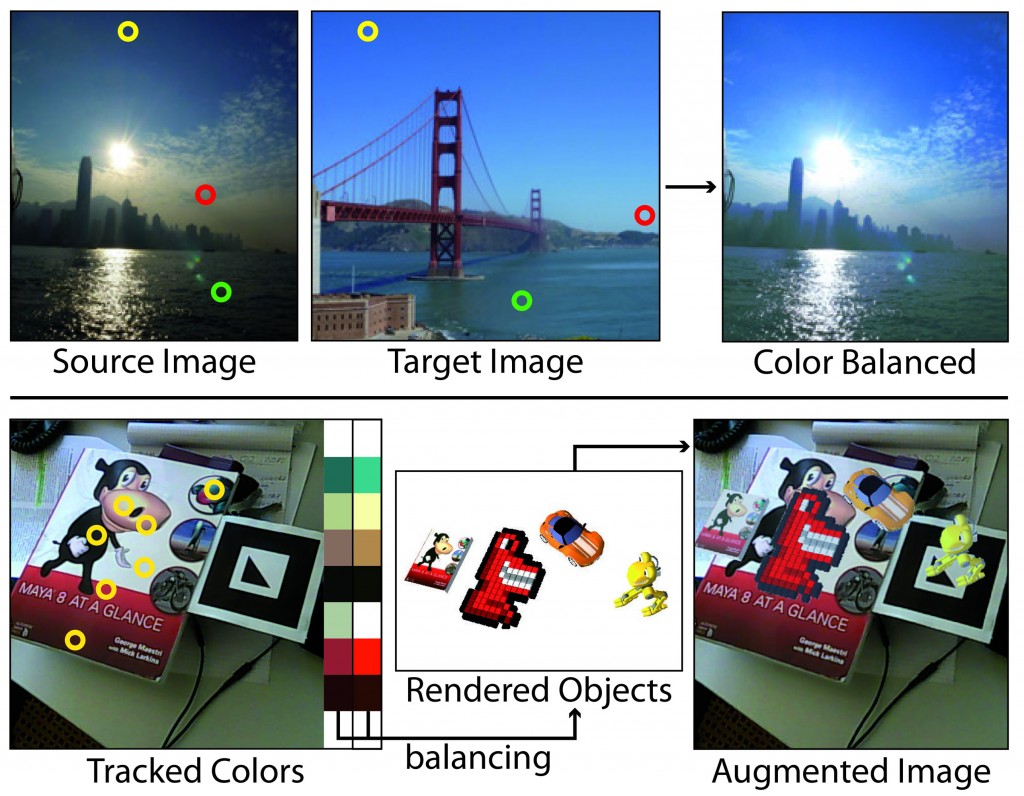

This paper addresses the problem of globally balancing colors between images. The input to our algorithm is a sparse set of desired color correspondences between a source and a target image.

October 13, 2012

3D International Conference on 3D Vision (3DV) 2012

Authors

Thomas Oskam (Disney Research/ETH Joint PhD)

Alexander Sorkine-Hornung (Disney Research)

Robert W. Sumner (Disney Research)

Markus Gross (Disney Research/ETH Zurich)

The global color space transformation problem is then solved by computing a smooth vector field in CIE Lab color space that maps the gamut of the source to that of the target. We employ normalized radial basis functions for which we compute optimized shape parameters based on the input images, allowing for more faithful and flexible color matching than existing RBF-, regression-, or histogram-based techniques. Furthermore, we show how the basic per-image matching can be efficiently and robustly extended to the temporal domain using RANSAC-based correspondence classification. Besides interactive color balancing for images, these properties make our method well suited to automatic, consistent embedding of synthetic graphics in video, as required by applications such as augmented reality.
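To make the idea of a smooth vector field driven by sparse correspondences more concrete, the following is a minimal sketch of normalized RBF interpolation of color offsets. It is an illustration under stated assumptions, not the authors' implementation: the Gaussian basis, the fixed shape parameter `eps` (the paper optimizes shape parameters from the input images), and the function names `normalized_rbf_weights`, `fit_color_field`, and `apply_color_field` are all hypothetical. Colors are assumed to be already converted to CIE Lab.

```python
import numpy as np


def normalized_rbf_weights(points, centers, eps):
    """Gaussian RBF weights, normalized so each row sums to 1.

    points:  (N, 3) Lab colors to evaluate at
    centers: (K, 3) Lab colors of the source correspondences
    eps:     shape parameter (assumed fixed here, not optimized)
    """
    # Squared Euclidean distances in Lab space, shape (N, K).
    d2 = ((points[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
    # Subtracting the per-row minimum does not change the normalized
    # result but avoids underflow far away from all centers.
    d2 = d2 - d2.min(axis=1, keepdims=True)
    phi = np.exp(-(eps ** 2) * d2)
    return phi / phi.sum(axis=1, keepdims=True)


def fit_color_field(src_lab, dst_lab, eps=0.05):
    """Solve for per-center offset coefficients of the vector field."""
    A = normalized_rbf_weights(src_lab, src_lab, eps)
    offsets = dst_lab - src_lab
    # Least squares is exact when A is invertible (distinct centers).
    coeffs, *_ = np.linalg.lstsq(A, offsets, rcond=None)
    return coeffs


def apply_color_field(colors_lab, src_lab, coeffs, eps=0.05):
    """Move each Lab color along the interpolated offset field."""
    W = normalized_rbf_weights(colors_lab, src_lab, eps)
    return colors_lab + W @ coeffs


# Hypothetical correspondences: four source colors and their targets.
src = np.array([[50.0, 10.0, 10.0],
                [70.0, -20.0, 30.0],
                [30.0, 40.0, -10.0],
                [80.0, 0.0, 0.0]])
dst = src + np.array([[5.0, -2.0, 3.0],
                      [4.0, -1.0, 2.0],
                      [6.0, -3.0, 4.0],
                      [5.0, -2.0, 2.0]])

coeffs = fit_color_field(src, dst)
balanced = apply_color_field(src, src, coeffs)
```

Because the weights are normalized, a constant offset shared by all correspondences is reproduced everywhere in color space, which is one reason normalized bases behave more gracefully than plain RBFs far from the sample colors. In this sketch the same `eps` must be passed to both fitting and evaluation.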