Rigid Stabilization of Facial Expressions

In this project, we develop the first automatic face stabilization method that achieves professional-quality results on large sets of facial expressions.

July 27, 2014

ACM SIGGRAPH 2014

Authors

Thabo Beeler (Disney Research)

Derek Bradley (Disney Research)

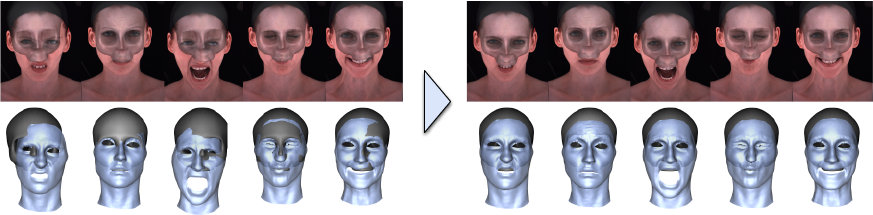

Facial scanning has become the industry-standard approach for creating digital doubles in movies and video games. This process involves capturing an actor while they perform different expressions that span their range of facial motion. Unfortunately, the scans typically contain a superposition of the desired expression and unwanted rigid head movement. To extract the true expression deformations, it is essential to factor out the rigid head movement for each expression, a process referred to as rigid stabilization. To achieve production quality, the industry usually performs face stabilization through a tedious and error-prone manual process.

In this project, we develop the first automatic face stabilization method that achieves professional-quality results on large sets of facial expressions. Because human faces can undergo a wide range of deformation, no single point on the skin surface moves rigidly with the underlying skull. Consequently, computing the rigid transformation from direct observation, a common approach in previous methods, is error-prone and leads to inaccurate results. Instead, we propose to stabilize the expressions indirectly by explicitly aligning them to an estimate of the underlying skull using anatomically motivated constraints. We show that the proposed method not only outperforms existing techniques but is also on par with manual stabilization, while requiring less than a second of computation time.
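
To make the "direct observation" baseline concrete, the sketch below fits a rigid transformation between corresponding surface points with a standard least-squares fit (the Kabsch algorithm). It is an illustration only, not the authors' skull-based method: the function names `rigid_align` and `rotation_error`, the random point set, and the shear used as a stand-in for skin deformation are all assumptions made for this example. It shows that the fit recovers the head motion when the points move rigidly, but becomes biased once the same points also deform.

```python
import numpy as np


def rigid_align(source, target):
    """Least-squares rigid fit (Kabsch): find R, t so that R @ p + t ~ q.

    source, target: (N, 3) arrays of corresponding points.
    """
    src_c = source.mean(axis=0)
    tgt_c = target.mean(axis=0)
    # 3x3 cross-covariance of the centered point sets.
    H = (source - src_c).T @ (target - tgt_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_c - R @ src_c
    return R, t


def rotation_error(R_a, R_b):
    """Angle in radians between two rotation matrices."""
    cos_angle = (np.trace(R_a @ R_b.T) - 1.0) / 2.0
    return float(np.arccos(np.clip(cos_angle, -1.0, 1.0)))


# Hypothetical toy data: random points standing in for a neutral face scan.
rng = np.random.default_rng(0)
neutral = rng.normal(size=(200, 3))

# Ground-truth head motion: 10 degrees about the z axis plus a translation.
angle = np.deg2rad(10.0)
R_true = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
t_true = np.array([0.5, -0.2, 1.0])

# Case 1: purely rigid motion. The fit recovers the head motion.
moved = neutral @ R_true.T + t_true
R_est, t_est = rigid_align(neutral, moved)

# Case 2: the same motion plus a non-rigid shear (assumed stand-in for
# skin deformation). The fit absorbs part of the deformation as rotation.
deformed = moved.copy()
deformed[:, 1] += 0.3 * moved[:, 0]
R_biased, t_biased = rigid_align(neutral, deformed)

print("rigid case error (rad):   ", rotation_error(R_est, R_true))
print("deformed case error (rad):", rotation_error(R_biased, R_true))
```

The second case is the situation described above: because every observed skin point carries some deformation, a fit on the surface alone cannot separate head motion from expression, which motivates aligning to an estimate of the skull instead.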