Live Texturing of Augmented Reality Characters from Colored Drawings

In this paper, we present an augmented reality coloring book App in which children color characters in a printed coloring book and inspect their work using a mobile device.

July 23, 2015

IEEE International Symposium on Mixed and Augmented Reality (ISMAR) 2015

Authors

Stephane Magnenat (Disney Research)

Dat Tien Ngo (EPF Lausanne)

Fabio Zünd (Disney Research/ETH Joint PhD)

Mattia Ryffel (Disney Research)

Chino Noris (Disney Research)

Gerhard Röthlin (Disney Research)

Alessia Marra (Disney Research)

Maurizio Nitti (Disney Research)

Pascal Fua (EPF Lausanne)

Markus Gross (Disney Research/ETH Zurich)

Robert W. Sumner (Disney Research)

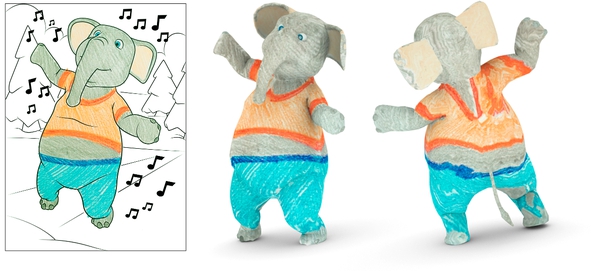

Coloring books capture the imagination of children and provide them with one of their earliest opportunities for creative expression. However, given the proliferation and popularity of digital devices, real-world activities like coloring can seem unexciting, and children become less engaged in them. Augmented reality holds unique potential to change this situation by bridging real-world activities and digital enhancements. In this paper, we present an augmented reality coloring book App in which children color characters in a printed coloring book and inspect their work using a mobile device. The drawing is detected and tracked, and the video stream is augmented with an animated 3-D version of the character that is textured according to the child’s coloring. This is possible thanks to several novel technical contributions. First, we present a texturing process that applies the captured texture from a 2-D colored drawing to both the visible and occluded regions of a 3-D character in real time. Second, we develop a deformable surface tracking method designed for colored drawings that uses a new outlier rejection algorithm for real-time tracking and surface deformation recovery. Third, we present a content creation pipeline to efficiently create the 2-D and 3-D content. Finally, we validate our work with two user studies that examine the quality of our texturing algorithm and the overall App experience.
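
The abstract does not spell out how colors from the drawing reach parts of the character that the drawing never shows. As a rough illustration only, the sketch below assumes a precomputed lookup map that tells each texel of the character's texture which drawing pixel to copy, so an occluded texel can borrow the color of a visible one. The names `build_texture`, `drawing`, and `lookup` are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]              # (R, G, B)
Image = List[List[Pixel]]                 # rows of pixels
LookupMap = List[List[Tuple[int, int]]]   # per texel: (row, col) in the drawing


def build_texture(drawing: Image, lookup: LookupMap) -> Image:
    """Fill each texel by copying the drawing pixel the lookup map points to.

    The lookup map is an assumption of this sketch: it is imagined to be
    computed once per character, offline, so that the per-frame work is a
    plain copy.
    """
    height = len(drawing)
    width = len(drawing[0])
    texture: Image = []
    for lookup_row in lookup:
        texture_row = []
        for row, col in lookup_row:
            # Clamp so a slightly out-of-range entry still reads a valid pixel.
            r = min(max(row, 0), height - 1)
            c = min(max(col, 0), width - 1)
            texture_row.append(drawing[r][c])
        texture.append(texture_row)
    return texture


RED, BLUE = (255, 0, 0), (0, 0, 255)

# A tiny 1x2 "drawing": one red pixel and one blue pixel.
drawing = [[RED, BLUE]]

# A 2x2 texture. The left texel of each row is treated as visible in the
# drawing; the right texel is treated as occluded and reuses the same source.
lookup = [
    [(0, 0), (0, 0)],
    [(0, 1), (0, 1)],
]

texture = build_texture(drawing, lookup)
```

Keeping the per-frame step to a table-driven copy is one way such a process could stay within a real-time budget on a mobile device; where the map comes from and how seams are handled are left out of this sketch.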