Imitating Human Movement with Teleoperated Robotic Head

We develop a controller for realizing smooth and accurate motion of a robotic head with application to a teleoperation system for the Furhat robot head.

August 26, 2016

International Symposium on Robot and Human Interactive Communication (RO-MAN) (2016)

Authors

Priyanshu Agarwal (Disney Research/University of Texas)

Samer Al Moubayed (Disney Research)

Alexander Alspach (Disney Research)

Joohyung Kim (Disney Research)

Elizabeth J. Carter (Disney Research)

Jill Fain Lehman (Disney Research)

Katsu Yamane (Disney Research)


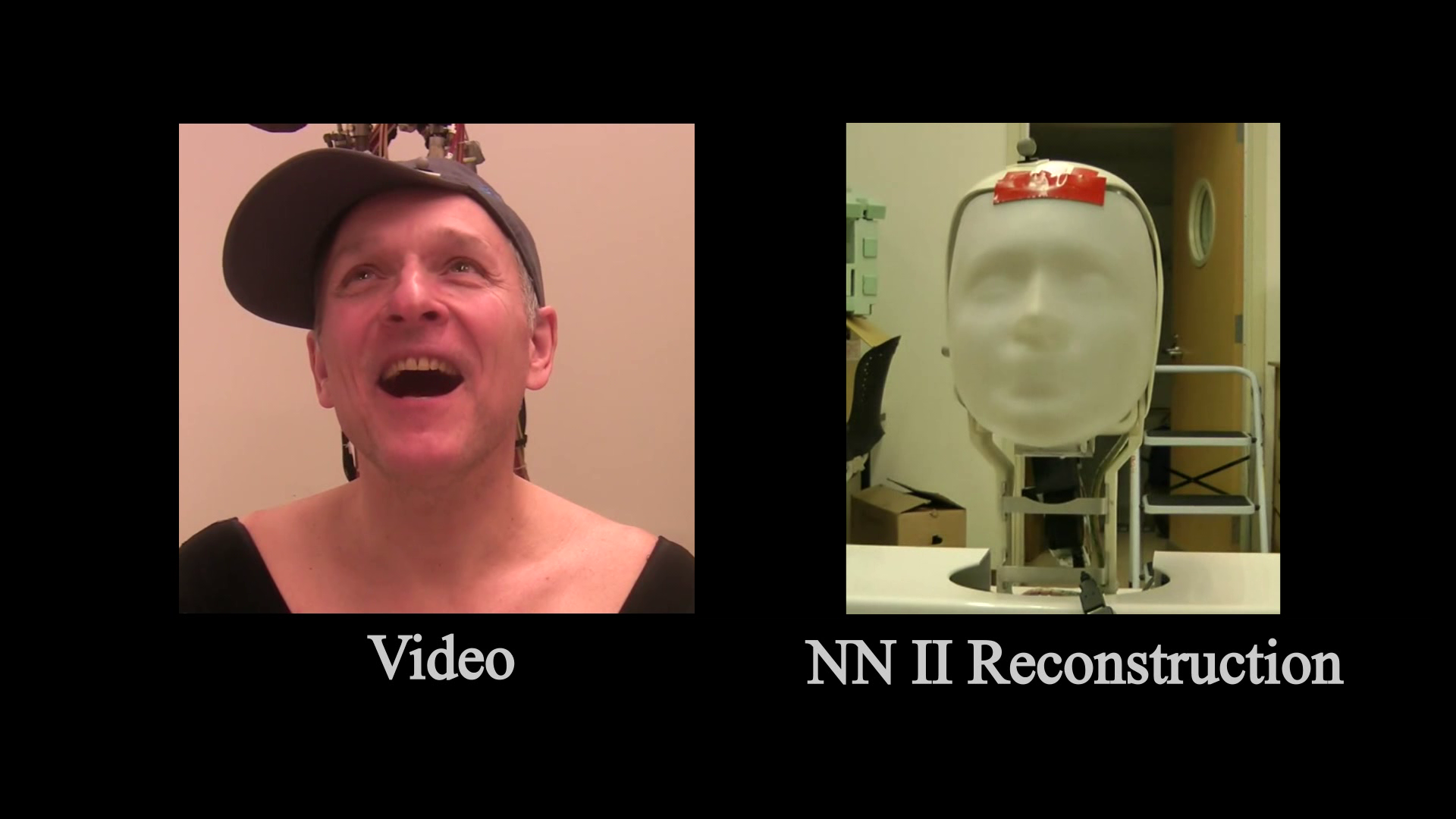

Effective teleoperation requires real-time control of a remote robotic system. In this work, we develop a controller for realizing smooth and accurate motion of a robotic head with application to a teleoperation system for the Furhat robot head, which we call TeleFurhat. The controller uses the operator's head motion, measured by a Microsoft Kinect 2 sensor, as its reference and applies a processing framework to condition and render that motion on the robot head. The processing framework includes a pre-filter based on a moving average filter, a neural-network-based model that improves the accuracy of the raw Kinect pose measurements, and a constrained-state Kalman filter that uses a minimum jerk model to smooth motion trajectories and limit the magnitude of changes in position, velocity, and acceleration. Our results demonstrate that the robot can reproduce human head motion in real time with a latency of approximately 100 to 170 ms while operating within its physical limits. Furthermore, viewers preferred motion produced by our method over motion rendered directly from the raw Kinect pose data.
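The three-stage processing framework described in the abstract can be illustrated with a minimal single-axis sketch (for example, head yaw in degrees). This is not the authors' implementation, and several pieces are assumptions: the names `moving_average`, `JerkKalmanFilter`, and `make_pipeline` are hypothetical; the neural-network correction is replaced by an identity placeholder (`correct`); the minimum jerk model is represented by treating jerk as zero-mean white noise driving a position/velocity/acceleration state; and the state constraint is implemented by clamping the estimate to fixed bounds after each update (estimate projection), which is one common way to build a constrained-state Kalman filter but not necessarily the formulation used in the paper.

```python
from collections import deque


def moving_average(window):
    """Causal moving-average pre-filter over the last `window` samples."""
    buf = deque(maxlen=window)

    def step(x):
        buf.append(x)
        return sum(buf) / len(buf)

    return step


class JerkKalmanFilter:
    """Kalman filter on the state [position, velocity, acceleration] of one head axis.

    Assumption: jerk is modeled as zero-mean white noise (a stand-in for the
    minimum jerk model). After each update, every state component is clamped
    to symmetric bounds, a simple estimate-projection form of state constraint.
    """

    def __init__(self, dt, q, r, limits):
        self.F = [[1.0, dt, dt * dt / 2.0], [0.0, 1.0, dt], [0.0, 0.0, 1.0]]
        g = [dt ** 3 / 6.0, dt ** 2 / 2.0, dt]  # how one step of jerk enters the state
        self.Q = [[q * gi * gj for gj in g] for gi in g]  # process noise from jerk variance q
        self.r = r  # measurement noise variance
        self.limits = limits  # hypothetical (position, velocity, acceleration) bounds
        self.x = [0.0, 0.0, 0.0]
        self.P = [[1.0 if i == j else 0.0 for j in range(3)] for i in range(3)]

    def step(self, z):
        F, n = self.F, range(3)
        # Predict: x <- F x,  P <- F P F^T + Q
        x = [sum(F[i][k] * self.x[k] for k in n) for i in n]
        FP = [[sum(F[i][k] * self.P[k][j] for k in n) for j in n] for i in n]
        P = [[sum(FP[i][k] * F[j][k] for k in n) + self.Q[i][j] for j in n] for i in n]
        # Update with a position-only measurement (H = [1, 0, 0])
        s = P[0][0] + self.r
        K = [P[i][0] / s for i in n]
        innovation = z - x[0]
        x = [x[i] + K[i] * innovation for i in n]
        self.P = [[P[i][j] - K[i] * P[0][j] for j in n] for i in n]
        # Constrain: project each state component onto [-limit, +limit]
        self.x = [max(-lim, min(lim, xi)) for xi, lim in zip(x, self.limits)]
        return self.x[0]


def make_pipeline(window, kf, correct=lambda angle: angle):
    """Pre-filter -> pose correction -> constrained smoother, one sample at a time.

    `correct` is a hypothetical placeholder for the learned Kinect pose correction.
    """
    pre = moving_average(window)

    def step(raw_angle):
        return kf.step(correct(pre(raw_angle)))

    return step
```

As a usage example, `kf = JerkKalmanFilter(dt=1 / 30, q=1e3, r=1.0, limits=(40.0, 200.0, 2000.0))` followed by `pipe = make_pipeline(5, kf)` yields a function that maps each raw sample to a bounded, smoothed command; the 30 Hz period matches the Kinect 2 frame rate, while `q`, `r`, the window length, and the limits are illustrative values only. Clamping keeps the estimate inside the bounds, but because the measurement update moves the position estimate directly, a velocity bound alone does not cap the per-sample position change; a real controller might also rate-limit the commanded position.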