Real-time Rendering with Compressed Animated Light Fields

We propose an end-to-end solution for presenting movie-quality animated graphics to the user while still allowing the sense of presence afforded by free-viewpoint head motion.

May 16, 2017

Graphics Interface 2017

Authors

Charalampos Koniaris (Disney Research)

Maggie Kosek (Disney Research)

David Sinclair (Disney Research)

Kenny Mitchell (Disney Research)


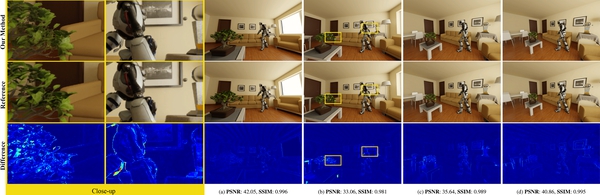

Compelling virtual reality experiences require high-quality imagery as well as head motion with six degrees of freedom. Most existing systems either limit the viewer's motion, by using prerecorded fixed-position 360-degree video panoramas, or are limited in realism, by using video-game-quality graphics rendered in real time on low-powered devices. We propose a solution for presenting movie-quality graphics to the user while still allowing the sense of presence afforded by free-viewpoint head motion. By transforming offline-rendered movie content into a novel immersive representation, we display the content in real time according to the tracked head pose. For each frame, we generate a set of 360-degree images (colors and depths) using a sparse set of cameras placed in the vicinity of the potential viewer locations. We compress the colors and depths separately, using codecs tailored to each type of data. At runtime, we recover the visible video data using view-dependent decompression and render it using a raycasting algorithm that performs on-the-fly scene reconstruction. We demonstrate compression ratios of 150:1 and greater, along with a quantitative analysis of image reconstruction quality and performance.
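The raycasting step can be illustrated with a minimal sketch. It rests on simplifying assumptions that are ours, not the paper's: a single uncompressed 360-degree color and depth panorama in equirectangular layout, depths stored as radial distance from the camera center, nearest-neighbour lookups, and fixed-step ray marching. The function names `dir_to_pixel` and `raycast_panorama` are hypothetical. The sketch marches a ray from the tracked eye position and reports the first sample that lands at or behind the surface recorded in the panorama. Moving the eye away from the camera center changes where each ray hits, which is the parallax that head motion requires.

```python
import math

# Hypothetical sketch, not the authors' algorithm: ray-march a single
# equirectangular color+depth panorama. Assumes one camera, radial depths,
# nearest-neighbour lookups, and a fixed step size.


def dir_to_pixel(dx, dy, dz, width, height):
    """Map a non-zero direction to (row, col) in an equirectangular image."""
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    lon = math.atan2(dx, dz)                       # [-pi, pi], 0 along +z
    lat = math.asin(max(-1.0, min(1.0, dy / norm)))  # [-pi/2, pi/2], +y up
    u = (lon + math.pi) / (2.0 * math.pi) * width
    v = (math.pi / 2.0 - lat) / math.pi * height
    # Clamp so that lon == pi or lat == -pi/2 still index inside the image.
    col = min(int(u), width - 1)
    row = min(int(v), height - 1)
    return row, col


def raycast_panorama(eye, direction, cam_center, depth, color,
                     t_max=50.0, step=0.01):
    """March a ray from `eye` until it reaches the surface stored in `depth`.

    depth[row][col] is the distance from cam_center to the first surface
    along that pixel's direction; color[row][col] is that surface's color.
    Returns (color, t) for the first hit, or None if nothing is hit by t_max.
    """
    height, width = len(depth), len(depth[0])
    dlen = math.sqrt(sum(c * c for c in direction))
    d = [c / dlen for c in direction]
    n_steps = int(t_max / step)
    for i in range(n_steps + 1):
        t = i * step                  # i * step avoids accumulated drift
        x = [eye[k] + t * d[k] for k in range(3)]
        w = [x[k] - cam_center[k] for k in range(3)]
        r = math.sqrt(sum(c * c for c in w))
        if r < 1e-9:
            continue                  # sample sits on the camera center
        row, col = dir_to_pixel(w[0], w[1], w[2], width, height)
        if r >= depth[row][col]:      # sample is at or behind the stored surface
            return color[row][col], t
    return None
```

For example, with an 8×4 panorama whose depths are all 5.0 (a sphere of radius 5 around a camera at the origin), a ray from the origin along +z hits at t ≈ 5, while the same ray from an eye displaced to (1, 0, 0) hits at t ≈ √24 ≈ 4.9. Because one panorama records only the first surface along each of its own rays, regions hidden from that camera cannot be reconstructed; drawing on several nearby cameras, as with the sparse camera set described above, is one way to fill such gaps.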