One-Shot Metric Learning for Person Re-identification

The proposed one-shot learning achieves performance that is competitive with supervised methods but uses only a single example rather than the hundreds required for the fully supervised case.

July 22, 2017

IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2017

Authors

Slawomir Bak (Disney Research)

Peter Carr (Disney Research)

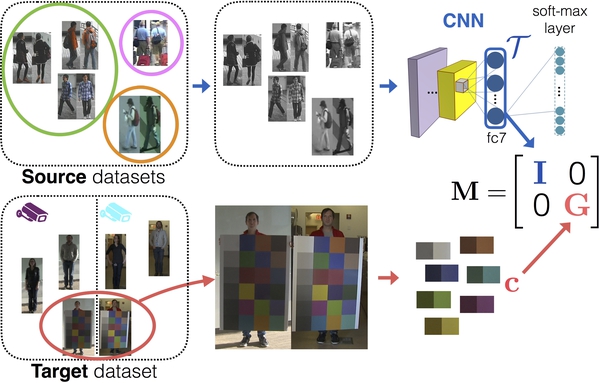

Re-identification of people in surveillance footage must cope with drastic variations in color, background, viewing angle and a person’s pose. Supervised techniques are often the most effective, but they require extensive annotation, which is infeasible for large camera networks. Unlike previous supervised learning approaches that require hundreds of annotated subjects, we learn a metric using a novel one-shot learning approach. We first learn a deep texture representation from intensity images with Convolutional Neural Networks (CNNs). When a CNN is trained using only intensity images, the learned embedding is color-invariant and shows high performance even on unseen datasets without fine-tuning. To account for differences in camera color distributions, we learn a color metric using a single pair of ColorChecker images. The proposed one-shot learning achieves performance that is competitive with supervised methods but uses only a single example rather than the hundreds required for the fully supervised case. Compared with semi-supervised and unsupervised state-of-the-art methods, our approach yields significantly higher accuracy.
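
To make the idea of learning a color metric from a single pair of ColorChecker images more concrete, here is a minimal sketch in Python with NumPy. It is an illustration under stated assumptions, not the formulation used in the paper: it assumes the mean RGB color of each chart patch has already been extracted for two cameras, and it uses a simple regularized Mahalanobis metric built from the patch-wise color differences. The function names `learn_color_metric` and `color_distance` and the `reg` parameter are hypothetical.

```python
import numpy as np


def learn_color_metric(patches_a, patches_b, reg=1e-3):
    """Estimate a Mahalanobis matrix from one pair of color-chart images.

    patches_a, patches_b: arrays of shape (n_patches, 3) holding the mean
    color of the same chart patches as seen by camera A and camera B
    (assumed to be extracted beforehand).
    reg: hypothetical ridge term that keeps the matrix invertible.
    """
    a = np.asarray(patches_a, dtype=float)
    b = np.asarray(patches_b, dtype=float)
    # Each row is how one known color shifts between the two cameras.
    diffs = a - b
    # Second-moment matrix of the cross-camera differences.
    sigma = diffs.T @ diffs / len(diffs)
    sigma += reg * np.eye(sigma.shape[0])
    # Directions with large cross-camera variation get a small weight.
    return np.linalg.inv(sigma)


def color_distance(x, y, metric):
    """Squared Mahalanobis distance between two color vectors."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    return float(d @ metric @ d)
```

In this sketch, color differences along channels that vary strongly between the two cameras are down-weighted, while differences along stable channels count for more. How the paper combines its color metric with the CNN texture representation is not covered here.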