Deep Video Color Propagation

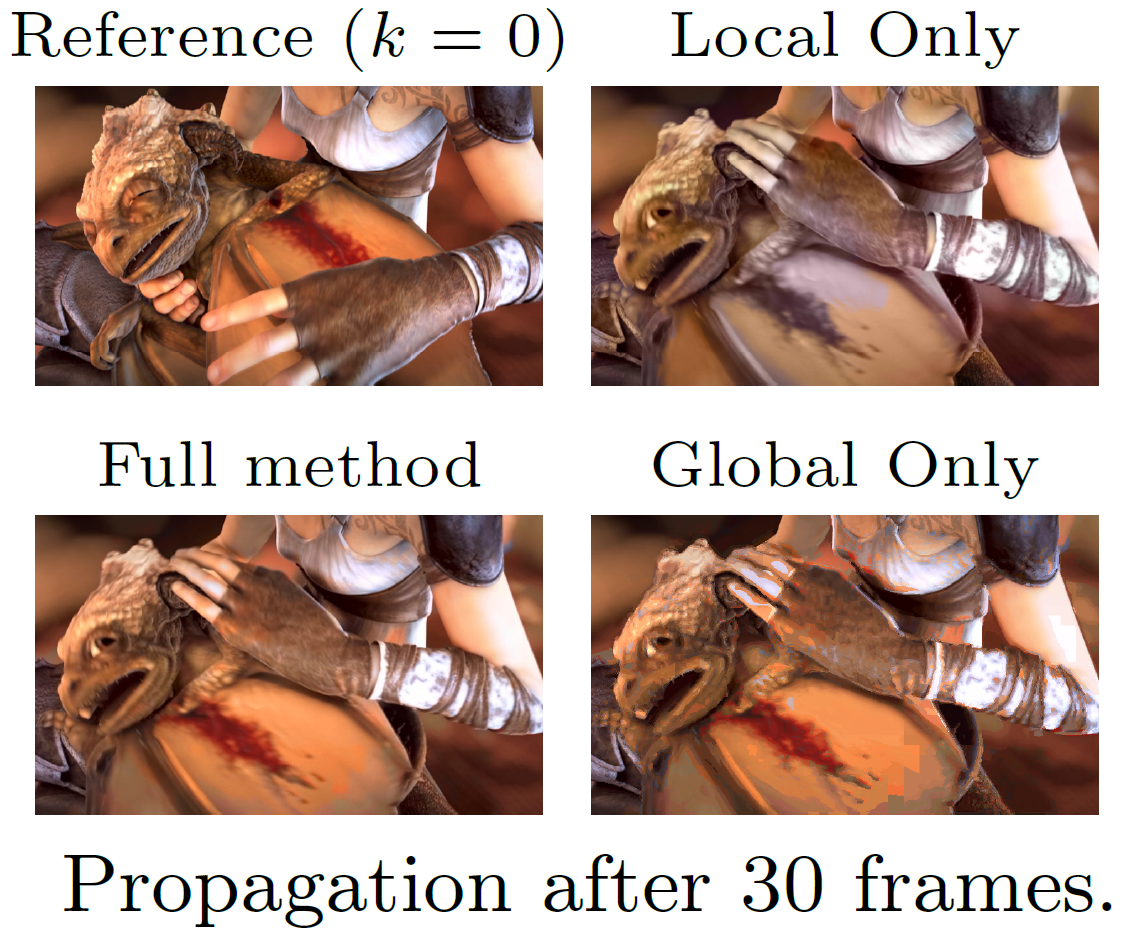

In this work we propose a deep learning framework for color propagation that combines a local strategy, to propagate colors frame-by-frame ensuring temporal stability, and a global strategy, using semantics for color propagation within a longer range.

September 4, 2018

British Machine Vision Conference 2018

Authors

Simone Schaub (Disney Research/ETH Joint PhD)

Victor Cornillère (Disney Research)

Aziz Djelouah (Disney Research)

Christopher Schroers (Disney Research)

Markus Gross (Disney Research/ETH Zurich)

Traditional approaches for color propagation in videos rely on some form of matching between consecutive video frames. Colors are then propagated both spatially and temporally. These methods, however, are computationally expensive and do not take advantage of semantic information of the scene. In this work we propose a deep learning framework for color propagation that combines a local strategy, to propagate colors frame-by-frame ensuring temporal stability, and a global strategy, using semantics for color propagation within a longer range. Our evaluation shows the superiority of our strategy over existing video and image color propagation methods.