Learning Dynamic 3D Geometry and Texture for Video Face Swapping

We approach the problem of face swapping from the perspective of learning simultaneous convolutional facial autoencoders for the source and target identities, using a shared encoder network with identity-specific decoders.

October 05, 2022

Pacific Graphics (2022)

Authors

Christopher Otto (DisneyResearch|Studios/ETH Joint PhD)

Jacek Naruniec (DisneyResearch|Studios)

Leonhard Helminger (DisneyResearch|Studios/ETH Joint PhD)

Thomas Etterlin (DisneyResearch|Studios/ETH Joint M.Sc.)

Graziana Mignone (DisneyResearch|Studios)

Prashanth Chandran (DisneyResearch|Studios/ETH Joint PhD)

Gaspard Zoss (DisneyResearch|Studios)

Christopher Schroers (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH)

Paulo Gotardo (DisneyResearch|Studios)

Derek Bradley (DisneyResearch|Studios)

Romann Weber (DisneyResearch|Studios)

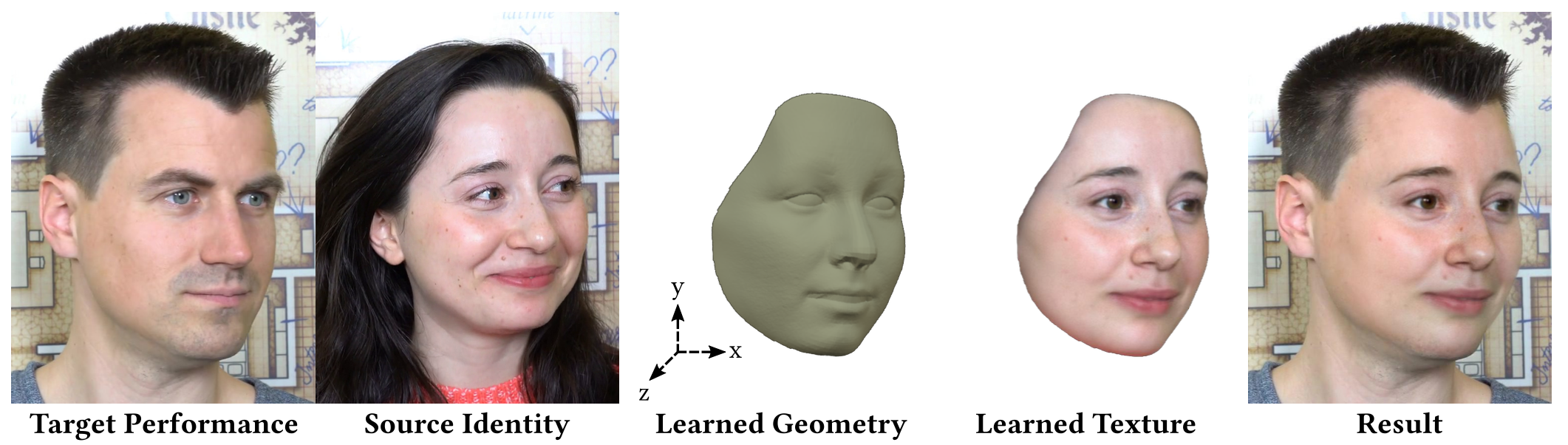

Face swapping is the process of applying a source actor’s appearance to a target actor’s performance in a video. This is a challenging visual effect that has seen increasing demand in film and television production. Recent work has shown that data-driven methods based on deep learning can produce compelling effects at production quality in a fraction of the time required for a traditional 3D pipeline. However, the dominant approach operates only on 2D imagery without reference to the underlying facial geometry or texture, resulting in poor generalization under novel viewpoints and little artistic control. Methods that do incorporate geometry rely on pre-learned facial priors that do not adapt well to particular geometric features of the source and target faces. We approach the problem of face swapping from the perspective of learning simultaneous convolutional facial autoencoders for the source and target identities, using a shared encoder network with identity-specific decoders. The key novelty in our approach is that each decoder first lifts the latent code into a 3D representation, comprising a dynamic face texture and a deformable 3D face shape, before projecting this 3D face back onto the input image using a differentiable renderer. The coupled autoencoders are trained only on videos of the source and target identities, without requiring 3D supervision. By leveraging the learned 3D geometry and texture, our method achieves higher-quality face swapping than is possible with off-the-shelf monocular 3D face reconstruction, and a lower overall Fréchet Inception Distance (FID) score than state-of-the-art 2D methods. Furthermore, our 3D representation allows for efficient artistic control over the result, which can be hard to achieve with existing 2D approaches.
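To make the data flow in the abstract concrete, here is a minimal structural sketch in plain Python. It is not the authors' implementation. Every name in it (`SharedEncoder`, `IdentityDecoder`, `render`, `swap_face`) is hypothetical, the random linear maps stand in for trained convolutional networks, texture is reduced to one scalar per vertex, and the orthographic projection is only a placeholder for a real differentiable renderer. It illustrates the routing only: one shared encoder, one decoder per identity, and a 3D shape plus texture produced before projection back to 2D.

```python
import random


def make_matrix(rows, cols, rng):
    """Random weights standing in for trained network parameters (assumption)."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class SharedEncoder:
    """Single encoder used for both identities: pixels -> latent code."""

    def __init__(self, n_pixels, n_latent, rng):
        self.weights = make_matrix(n_latent, n_pixels, rng)

    def __call__(self, pixels):
        return matvec(self.weights, pixels)


class IdentityDecoder:
    """Per-identity decoder: latent code -> (3D vertices, per-vertex texture)."""

    def __init__(self, n_latent, n_vertices, rng):
        self.n_vertices = n_vertices
        self.shape_weights = make_matrix(3 * n_vertices, n_latent, rng)
        self.texture_weights = make_matrix(n_vertices, n_latent, rng)

    def __call__(self, latent):
        flat = matvec(self.shape_weights, latent)
        # Group the flat output into (x, y, z) triples, one per vertex.
        vertices = [tuple(flat[3 * i:3 * i + 3]) for i in range(self.n_vertices)]
        texture = matvec(self.texture_weights, latent)
        return vertices, texture


def render(vertices, texture):
    """Toy orthographic projection: drop depth, keep one value per 2D point.

    Placeholder for a differentiable renderer, which this is not.
    """
    return [(x, y, value) for (x, y, _z), value in zip(vertices, texture)]


def swap_face(encoder, decoders, frame_pixels, identity):
    """Encode a frame, then decode and render it with the chosen identity."""
    latent = encoder(frame_pixels)
    vertices, texture = decoders[identity](latent)
    return render(vertices, texture)


rng = random.Random(0)
encoder = SharedEncoder(n_pixels=16, n_latent=4, rng=rng)
decoders = {
    "source": IdentityDecoder(n_latent=4, n_vertices=5, rng=rng),
    "target": IdentityDecoder(n_latent=4, n_vertices=5, rng=rng),
}

target_frame = [rng.random() for _ in range(16)]

# Same latent code, two decoders: reconstruction versus swap.
reconstruction = swap_face(encoder, decoders, target_frame, "target")
swapped = swap_face(encoder, decoders, target_frame, "source")
```

In this sketch, decoding a target frame with the `"target"` decoder corresponds to the autoencoding path used during training, while decoding the same latent code with the `"source"` decoder corresponds to the swap. Because the intermediate result is an explicit set of vertices and texture values, they could in principle be edited before rendering, which is the kind of artistic control the abstract refers to.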