ReBaIR: Reference-Based Image Restoration

In this work, we propose a novel and generic reference-based restoration method that is applicable to any model and any task. We start with the observation that restoration models typically operate in feature space before a final decoding step, which transforms the extracted features into an image.

September 29, 2025

AIM: Advances in Image Manipulation Workshop and Challenges (2025)

Authors

Michael Bernasconi (ETH Zurich/DisneyResearch|Studios)

Abdelaziz Djelouah (DisneyResearch|Studios)

Yang Zhang (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH Zurich)

Christopher Schroers (DisneyResearch|Studios)


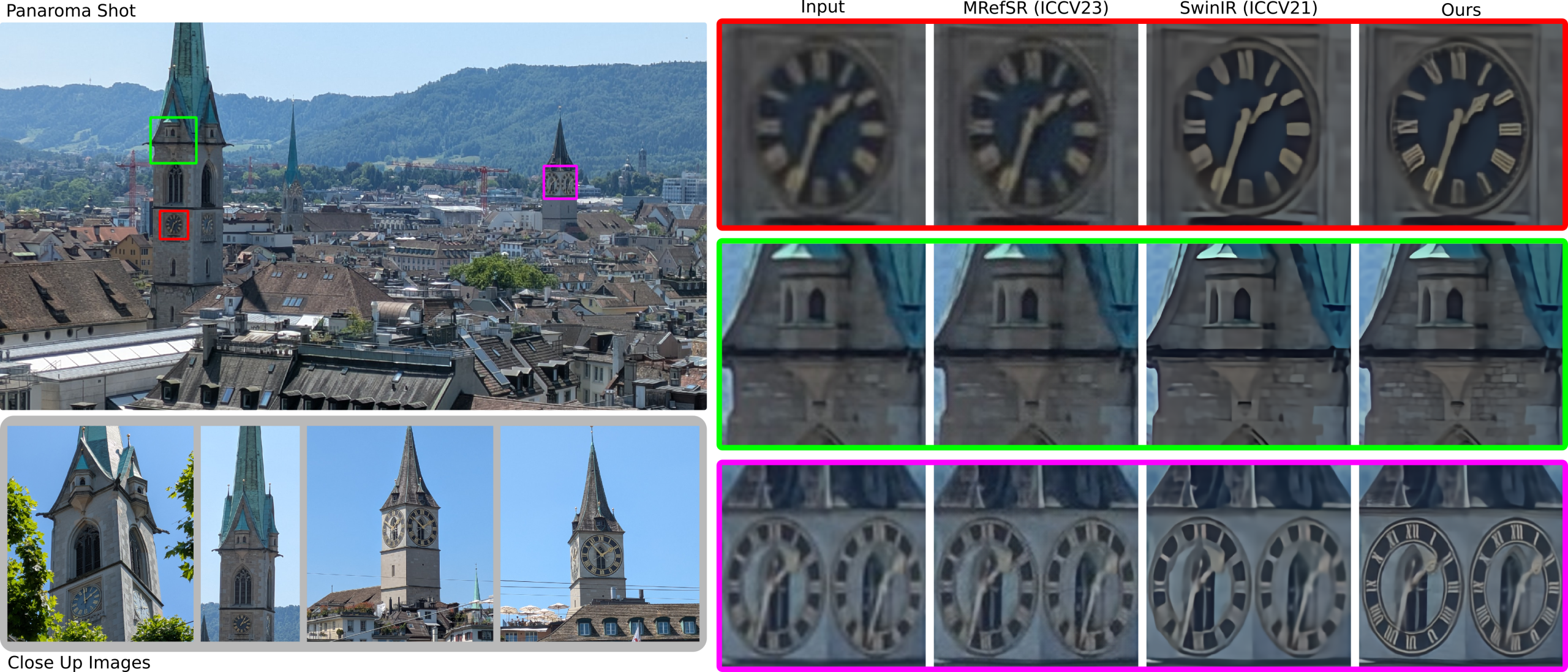

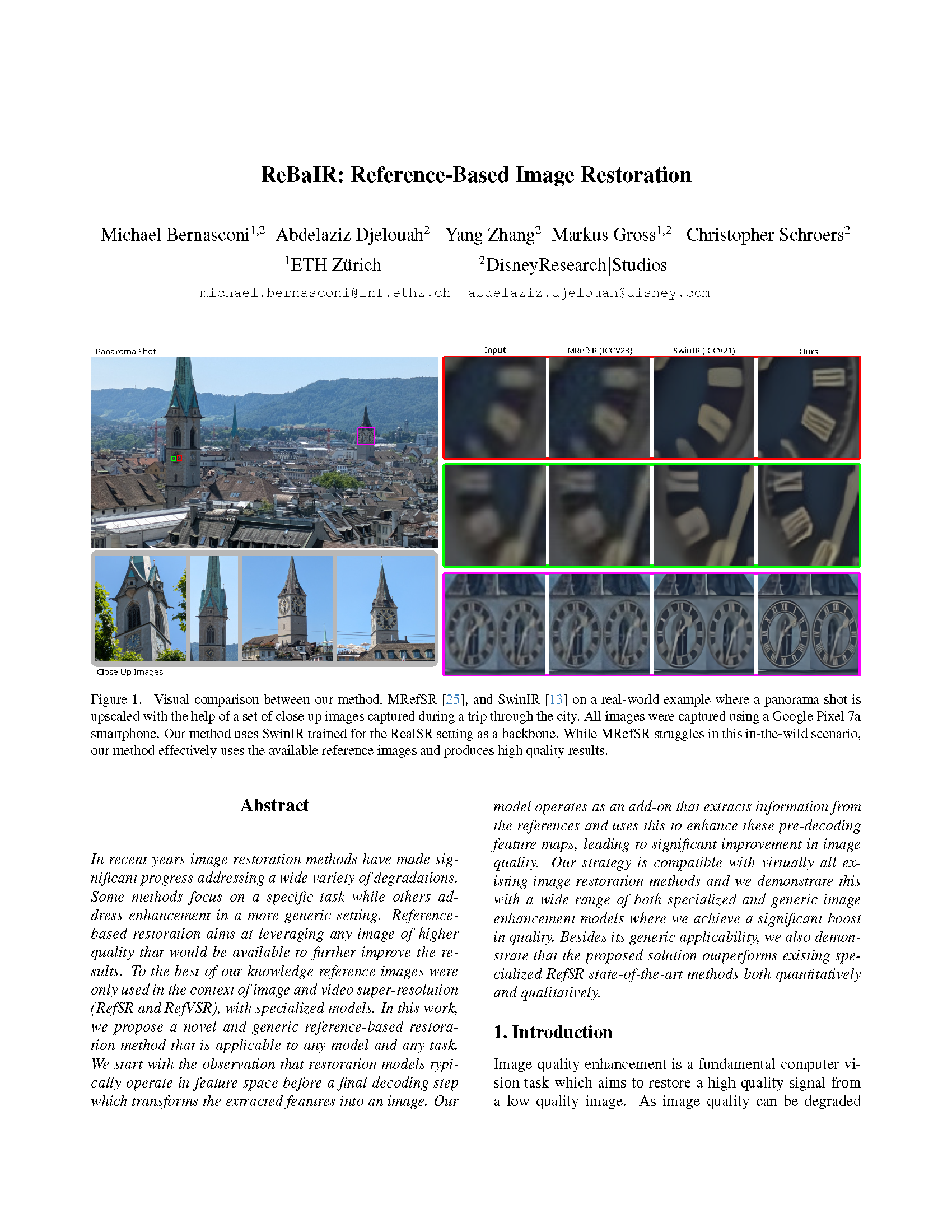

In recent years, image restoration methods have made significant progress in addressing a wide variety of degradations. Some methods focus on a specific task, while others address enhancement in a more generic setting. Reference-based restoration aims to leverage any available image of higher quality to further improve the results. To the best of our knowledge, reference images have so far been used only in the context of image and video super-resolution (RefSR and RefVSR), with specialized models. In this work, we propose a novel and generic reference-based restoration method that is applicable to any model and any task. We start with the observation that restoration models typically operate in feature space before a final decoding step, which transforms the extracted features into an image. Our model operates as an add-on that extracts information from the references and uses it to enhance these pre-decoding feature maps, leading to a significant improvement in image quality. Our strategy is compatible with virtually all existing image restoration methods, and we demonstrate this with a wide range of both specialized and generic image enhancement models, where we achieve a significant boost in quality. Beyond its generic applicability, we also demonstrate that the proposed solution outperforms existing specialized state-of-the-art RefSR methods both quantitatively and qualitatively.
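To make the add-on idea concrete, the following is a minimal NumPy sketch of the data flow described above: a base model encodes the input into features, an add-on enriches those pre-decoding features using features extracted from a reference image, and the decoder then produces the output. Everything in it is an illustrative assumption rather than the paper's architecture: the toy per-pixel `encode` and `decode` functions stand in for an arbitrary restoration backbone, and the hypothetical `reference_addon` uses plain softmax cross-attention with a residual connection, which may differ from the actual fusion mechanism.

```python
import numpy as np

def encode(image, w_enc):
    """Toy stand-in for a restoration backbone (assumption): per-pixel linear map + ReLU."""
    # image: (H, W, 3) -> features: (H, W, C)
    return np.maximum(image @ w_enc, 0.0)

def decode(features, w_dec):
    """Toy stand-in for the final decoding step (assumption): per-pixel linear map to RGB."""
    # features: (H, W, C) -> image: (H, W, 3)
    return features @ w_dec

def reference_addon(feat, ref_feat, scale=1.0):
    """Hypothetical add-on that enhances pre-decoding features with reference features.

    Uses softmax cross-attention (an assumption, not the paper's stated design):
    every input location attends over all reference locations, and the attended
    reference features are added back residually.
    """
    h, w, c = feat.shape
    q = feat.reshape(-1, c)                      # queries from the degraded input, (N, C)
    k = ref_feat.reshape(-1, c)                  # keys/values from the reference, (M, C)
    logits = q @ k.T / np.sqrt(c)                # similarity scores, (N, M)
    logits -= logits.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=1, keepdims=True)
    attended = weights @ k                       # reference information per input location
    return feat + scale * attended.reshape(h, w, c)

def restore(image, reference, w_enc, w_dec, scale=1.0):
    """Base model with the add-on inserted just before decoding."""
    feat = encode(image, w_enc)
    ref_feat = encode(reference, w_enc)
    enhanced = reference_addon(feat, ref_feat, scale)
    return decode(enhanced, w_dec)
```

In this sketch the base model is left untouched: with `scale=0.0` the pipeline reduces exactly to `decode(encode(image))`, which mirrors the add-on framing in which the reference branch only modifies the features handed to the existing decoder. The reference image may have a different spatial size than the input, since attention operates over flattened locations.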