LDIP: Long Distance Information Propagation for Video Super-Resolution

In this work, we propose a strategy for long-distance information propagation with a flexible fusion module that can optionally also assimilate information from additional high-resolution reference images.

October 16, 2025

International Conference on Computer Vision (ICCV) (2025)

Authors

Michael Bernasconi (ETH Zurich/DisneyResearch|Studios)

Abdelaziz Djelouah (DisneyResearch|Studios)

Yang Zhang (DisneyResearch|Studios)

Markus Gross (DisneyResearch|Studios/ETH Zurich)

Christopher Schroers (DisneyResearch|Studios)


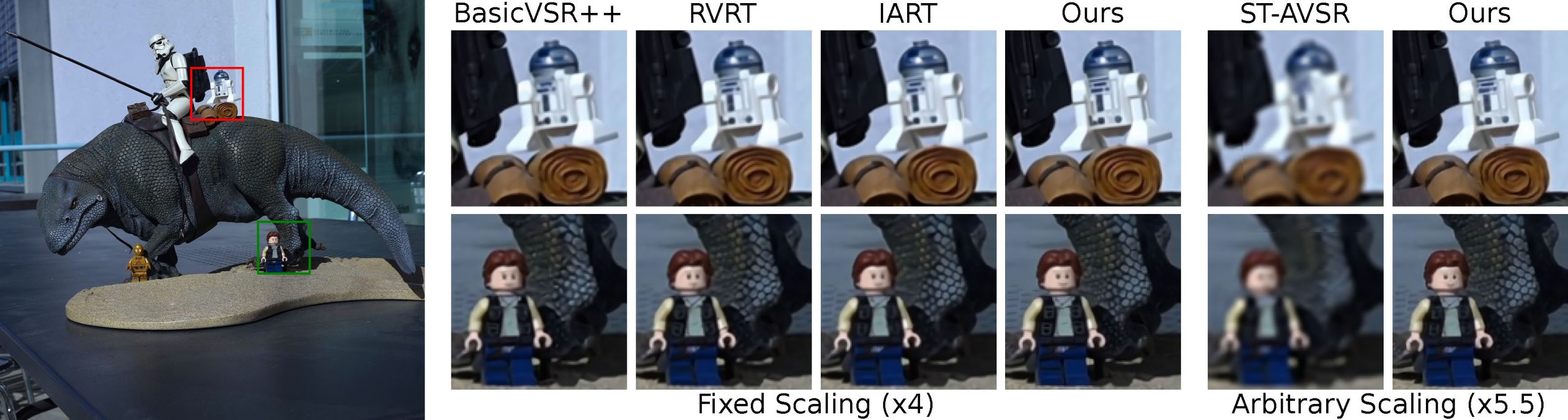

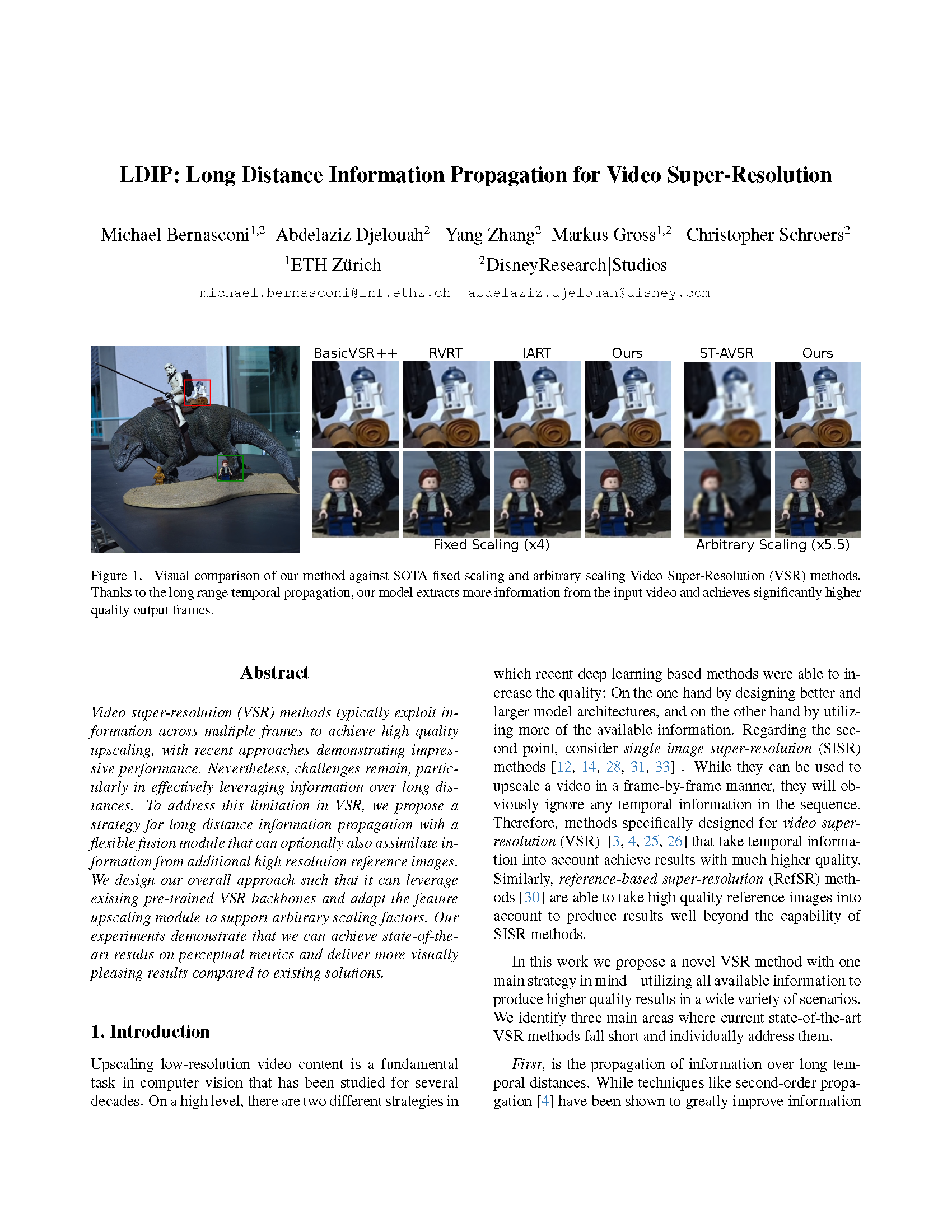

Video super-resolution (VSR) methods typically exploit information across multiple frames to achieve high-quality upscaling, and recent approaches demonstrate impressive performance. Nevertheless, challenges remain, particularly in effectively leveraging information over long distances. To address this limitation in VSR, we propose a strategy for long-distance information propagation with a flexible fusion module that can optionally also assimilate information from additional high-resolution reference images. We design our overall approach such that it can leverage existing pre-trained VSR backbones, and we adapt the feature upscaling module to support arbitrary scaling factors. Our experiments demonstrate that we achieve state-of-the-art results on perceptual metrics and deliver more visually pleasing results than existing solutions.
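The abstract does not say how the fusion module works internally. As a hedged illustration of what a "flexible" fusion over a variable number of sources can look like, the sketch uses scaled dot-product attention. That is a common technique chosen here as an assumption, not necessarily the module used in LDIP. Each candidate feature (propagated from distant frames, plus optional high-resolution reference features) is weighted by its similarity to the current query feature. The helper names `dot`, `softmax`, and `fuse` are hypothetical.

```python
import math
from typing import List, Optional, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Inner product of two equal-length feature vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: Sequence[float]) -> List[float]:
    """Normalize scores to weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse(query: Vector,
         frame_feats: Sequence[Vector],
         ref_feats: Optional[Sequence[Vector]] = None) -> Vector:
    """Hypothetical attention-style fusion (an assumption, not LDIP's design).

    Sources are features from (possibly distant) frames plus optional
    reference-image features. Weights are normalized over however many
    sources are given, so the same function works with or without references.
    """
    sources = list(frame_feats) + list(ref_feats or [])
    if not sources:
        return list(query)  # nothing to fuse: pass the query through
    scale = 1.0 / math.sqrt(len(query))  # standard scaled dot-product factor
    weights = softmax([dot(query, s) * scale for s in sources])
    return [sum(w * s[i] for w, s in zip(weights, sources))
            for i in range(len(query))]


if __name__ == "__main__":
    q = [1.0, 0.0]
    frames = [[1.0, 0.0], [0.0, 1.0]]
    print(fuse(q, frames))              # frames only
    print(fuse(q, frames, [[2.0, 0.0]]))  # frames plus one reference feature
```

Because the softmax is taken over the concatenated list of sources, adding reference features requires no architectural change in this sketch: they simply compete with frame features for attention weight.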