Practical Dynamic Facial Appearance Modeling and Acquisition

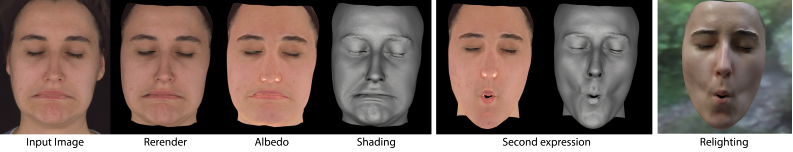

We present a method to acquire dynamic properties of facial skin appearance, including dynamic diffuse albedo encoding blood flow, dynamic specular intensity, and per-frame high-resolution normal maps for a facial performance sequence.

November 27, 2018

ACM SIGGRAPH Asia 2018

Authors

Paulo Gotardo (Disney Research)

Jeremy Riviere (Disney Research)

Derek Bradley (Disney Research)

Abhijeet Ghosh (Imperial College London)

Thabo Beeler (Disney Research)


The method reconstructs these maps from a purely passive multi-camera setup, without requiring polarization or temporally multiplexed illumination. Hence, it is well suited for integration with existing passive systems for facial performance capture. To solve this seemingly underconstrained problem, we demonstrate that albedo dynamics during a facial performance can be modeled as a combination of: (1) a static, high-resolution base albedo map, modeling full skin pigmentation; and (2) a dynamic, one-dimensional component in the CIE L*a*b* color space, which explains changes in hemoglobin concentration due to blood flow. We leverage this albedo subspace and additional constraints on appearance and surface geometry to also estimate specular reflection parameters and resolve high-resolution normal maps with unprecedented detail in a passive capture system. These constraints are built into an inverse rendering framework that minimizes the difference between the rendered face and the captured images, incorporating constraints from multiple views for every texel on the face. The presented method is the first system capable of capturing high-quality dynamic appearance maps at full resolution and video framerates, providing a major step forward in the area of facial appearance acquisition.
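As a rough illustration of the albedo subspace described above, the following sketch models one texel's albedo as a static base color in CIE L*a*b* plus a single per-frame scalar along a fixed direction. It is a minimal sketch under stated assumptions, not the authors' implementation: the direction vector `BLOOD_DIR`, the function names, and the sample color values are all hypothetical placeholders chosen for illustration.

```python
import math

# Hypothetical direction of blood-flow-driven change in (L*, a*, b*).
# Placeholder values only: slightly darker (lower L*) and redder (higher a*).
BLOOD_DIR = (-0.5, 1.0, 0.2)


def _unit(v):
    """Return v scaled to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def dynamic_albedo(base_lab, amount, direction=BLOOD_DIR):
    """Albedo of one texel in one frame: static base plus a 1-D offset.

    base_lab: static (L*, a*, b*) base albedo of the texel.
    amount:   per-frame scalar, the only dynamic unknown in this model.
    """
    d = _unit(direction)
    return tuple(b + amount * c for b, c in zip(base_lab, d))


def fit_amount(observed_lab, base_lab, direction=BLOOD_DIR):
    """Least-squares estimate of the scalar: project (observed - base) onto the direction."""
    d = _unit(direction)
    return sum((o - b) * c for o, b, c in zip(observed_lab, base_lab, d))


base = (65.0, 15.0, 18.0)           # illustrative base albedo, not measured data
frame = dynamic_albedo(base, 4.0)   # albedo in a frame with increased blood flow
recovered = fit_amount(frame, base)
```

With a fixed base map and direction, each texel contributes only one dynamic albedo unknown per frame instead of three, which is one way to see how such a subspace helps constrain the otherwise underconstrained problem.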