Multimodal Conditional 3D Face Geometry Generation

In this work, we present a new method for multimodal conditional 3D face geometry generation that allows user-friendly control over the output identity and expression via a number of different conditioning signals

October 26, 2025

Shape Modeling International (SMI) (2025)

Authors

Christopher Otto (ETH Zurich, DisneyResearch|Studios)

Prashanth Chandran (DisneyResearch|Studios)

Sebastian Weiss (DisneyResearch|Studios)

Markus Gross (ETH Zurich, DisneyResearch|Studios)

Gaspard Zoss (DisneyResearch|Studios)

Derek Bradley (DisneyResearch|Studios)

Multimodal Conditional 3D Face Geometry Generation

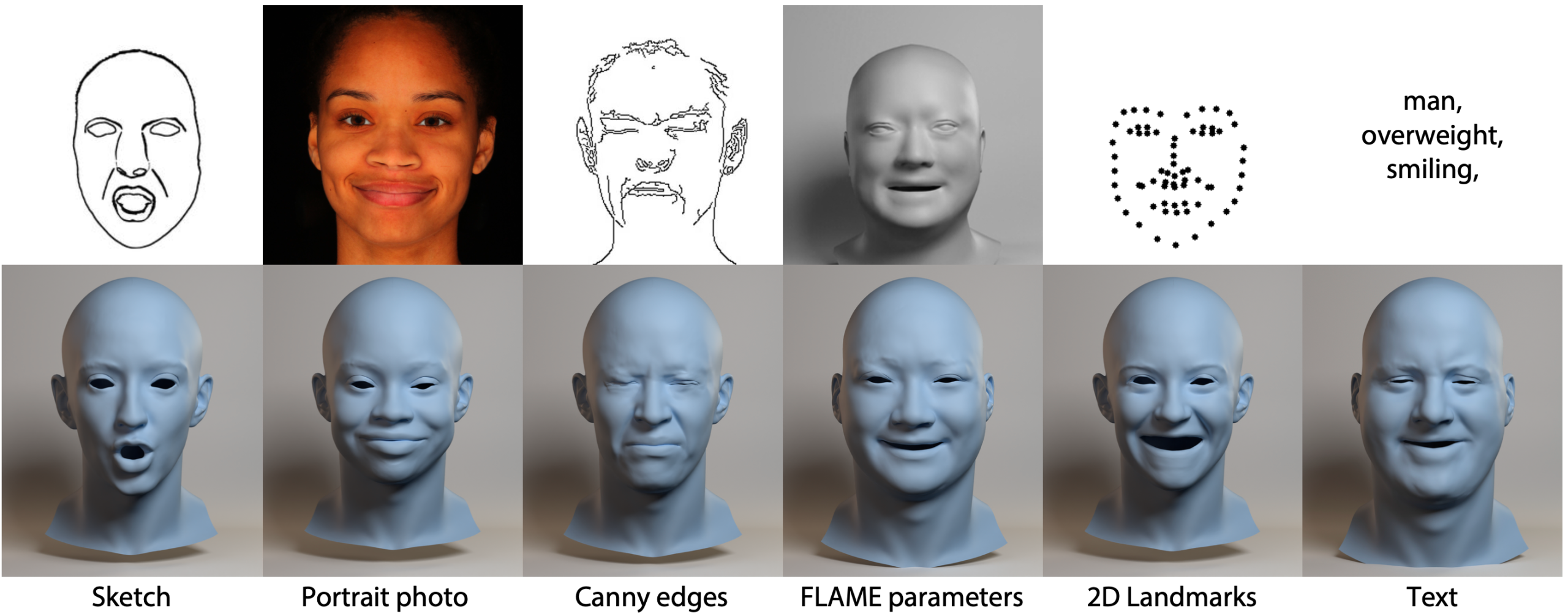

We present a new method for multimodal conditional 3D face geometry generation that allows user-friendly control over the output identity and expression via a number of different conditioning signals. Within a single model, we demonstrate 3D faces generated from artistic sketches, portrait photos, Canny edges, FLAME face model parameters, 2D face landmarks, or text prompts. Our approach is based on a diffusion process that generates 3D geometry in a 2D parameterized UV domain. Geometry generation passes each conditioning signal through a set of cross-attention layers (IP-Adapter), one set for each user-defined conditioning signal. The result is an easy-to-use 3D face generation tool that produces topology-consistent, high-quality geometry with fine-grain user control.